Ioannis Mitliagkas

Biography

Ioannis Mitliagkas (Γιάννης Μητλιάγκας) is an associate professor in the Department of Computer Science and Operations Research (DIRO) at the Université de Montréal. He is also a Core Academic Member of Mila, the Quebec Artificial Intelligence Institute, and holds a Canada CIFAR AI Chair. He currently works part-time as a research scientist at Google DeepMind in Montréal.

Previously, he was a postdoctoral researcher in the Departments of Statistics and Computer Science at Stanford University. He earned his doctorate from the Department of Electrical and Computer Engineering at the University of Texas at Austin.

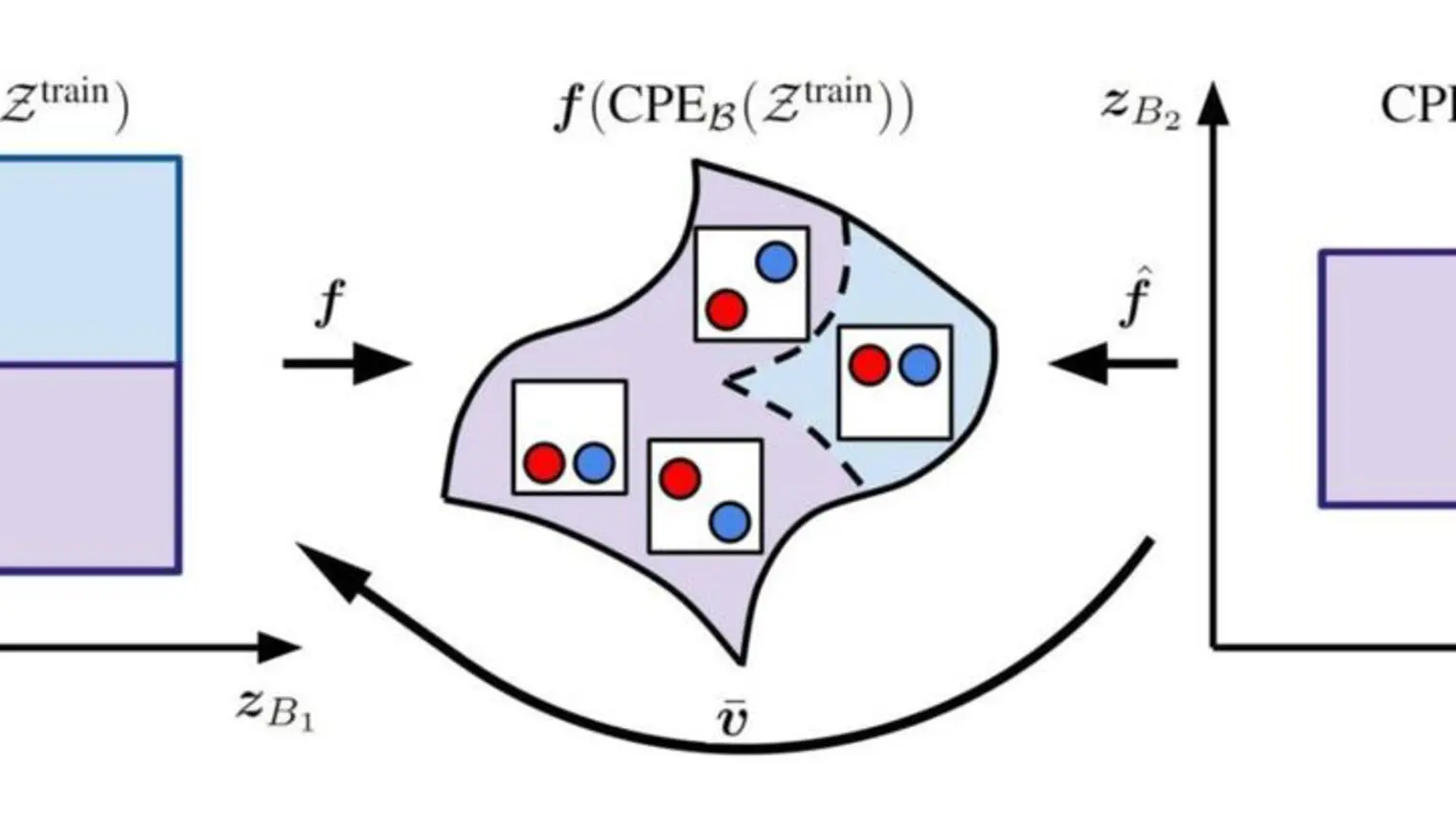

His research covers topics in machine learning, in particular optimization, deep learning theory, and statistical learning. His recent work includes efficient and adaptive optimization methods, the study of the interaction between optimization and the dynamics of large-scale learning systems, and the dynamics of games.