Ioannis Mitliagkas

Biography

Ioannis Mitliagkas (Γιάννης Μητλιάγκας) is an associate professor in the Department of Computer Science and Operations Research (DIRO) at Université de Montréal, as well as a Core Academic member of Mila – Quebec Artificial Intelligence Institute and a Canada CIFAR AI Chair. He holds a part-time position as a staff research scientist at Google DeepMind Montréal.

Previously, he was a postdoctoral scholar in the Departments of Statistics and Computer Science at Stanford University. He obtained his PhD from the Department of Electrical and Computer Engineering at the University of Texas at Austin.

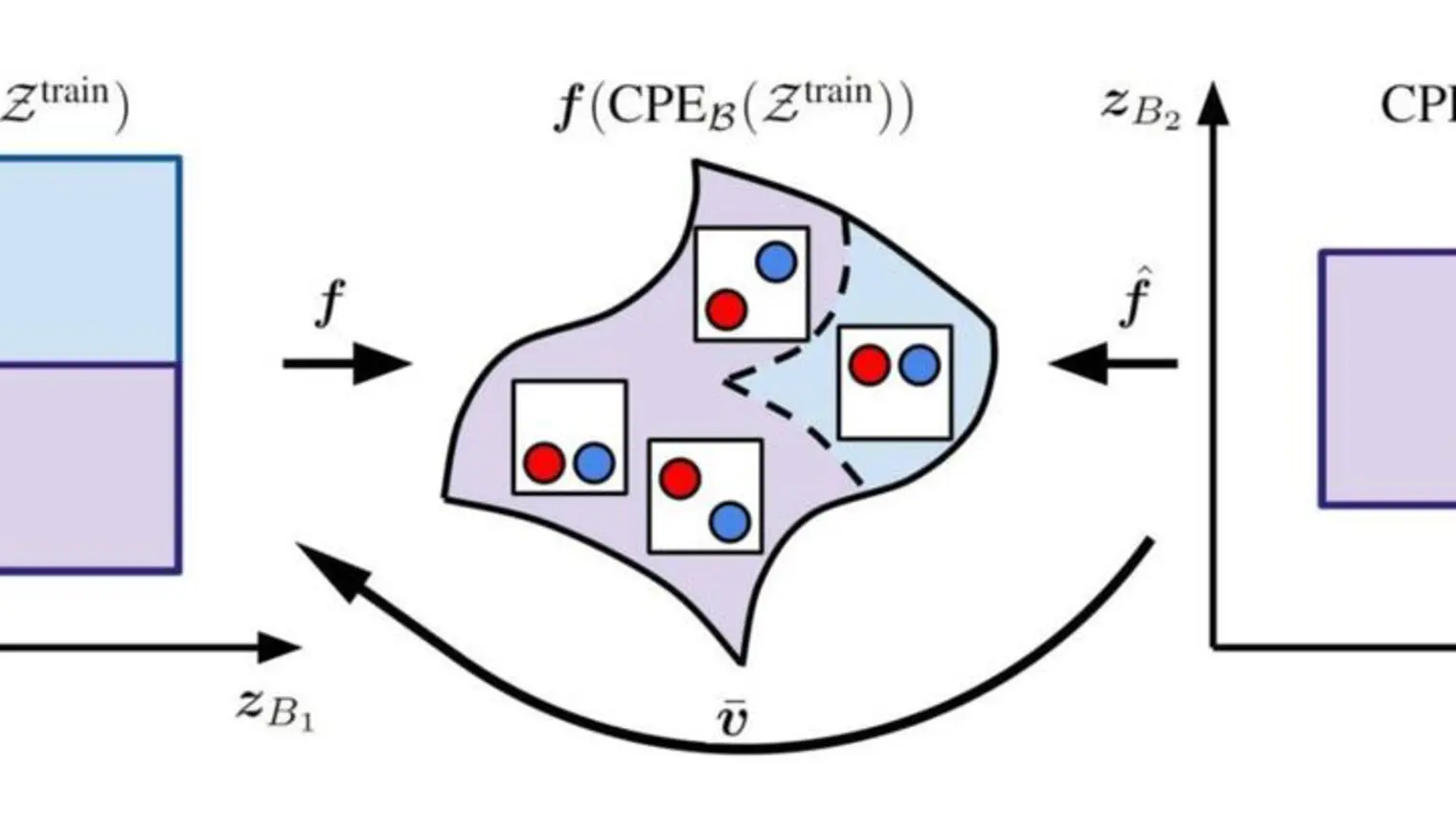

His research spans topics in machine learning, with an emphasis on optimization, deep learning theory, and statistical learning. His recent work includes methods for efficient and adaptive optimization, studies of the interaction between optimization and the dynamics of large-scale learning systems, and the dynamics of games.