First Languages AI Reality

The First Languages AI Reality (FLAIR) initiative enables the next chapter in Indigenous language revitalization with artificial intelligence (AI) and immersive technology.

More than 50% of the world’s languages are projected to become extinct or seriously endangered by 2100. When a language goes extinct, the unique cultural, historical, and ecological knowledge it carries is irrecoverably lost. Because each language is a unique expression of the human experience of the world, the knowledge it encodes may hold the key to answering fundamental questions of the future.

The majority of threatened languages are Indigenous languages. Indigenous peoples, who represent less than 6% of the global population, speak more than 4,000 languages worldwide. It is estimated that one Indigenous language dies every two weeks. Languages are central to the identity of Indigenous peoples and to the preservation of their cultures, worldviews, and visions; they are also an expression of self-determination.

We envision a world in which Indigenous communities have full self-determination and sovereignty over their language and culture. We imagine technology serving the revitalization and flourishing of languages: a tool to connect community members, celebrate their identity, and transmit their culture and knowledge on the community’s own terms.

The FLAIR initiative serves Indigenous communities in their efforts to revitalize their language and culture through technology.

We are building the foundations for Indigenous Voice AI in systems explicitly designed to respect data sovereignty and linguistic self-determination. Our foundational automatic speech recognition (ASR) research aims to develop a method for the rapid creation of custom models for endangered languages. These models can be used for language learning, audio transcription, voice-controlled technology and much more. Furthermore, Voice AI will position Indigenous communities to participate in the Metaverse in their heritage languages and facilitate intergenerational language transmission, a critical factor for language vitality. It will enable inclusive immersive experiences in which Indigenous youth can reconnect with their heritage in culturally meaningful exchanges and activities.

We are proposing a multifaceted approach that aims to drastically reduce data requirements. Developing ASR for a new language typically requires hundreds of hours of audio data. For most Indigenous languages, this is infeasible: audio recordings are limited or nonexistent, and very few speakers remain. In many cases, barely a dozen native speakers are left, or none at all, and those who remain tend to be of advanced age, so large-scale data collection from them is not realistic. Where recorded audio does exist, it is often untranscribed or inaccessible. A method that decreases the number of hours of audio data required is therefore critical to unlocking the potential of AI for low-resource languages.

The immediate focus of FLAIR is to validate solutions for a specific set of Indigenous languages in North America. Everything we learn and all the tools we build will be shared publicly as open source, free to use. Next, we will scale up to deliver the resulting system for rapid ASR development to Indigenous communities worldwide, since it could help solve similar problems for the thousands of languages spoken by other under-resourced and underserved communities.

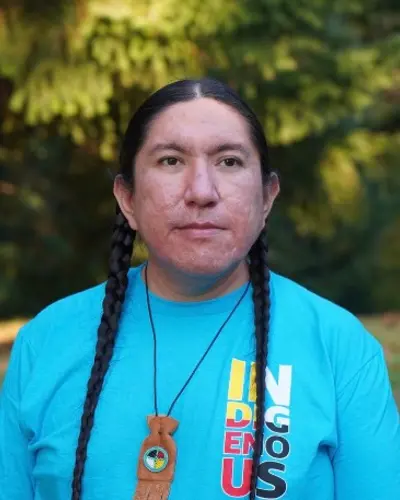

Watch FLAIR’s Technical Director, Michael Running Wolf, present his vision for the project at a TEDx event held in Boston.

The FLAIR Initiative was founded and is led by Indigenous technologists. It has grown into a coalition of technologists, language reclamation and documentation leaders, machine learning scientists, endangered-language teachers and researchers, and computational linguists. FLAIR activities are also made possible by the contributions of Indigenous AI students and language and culture consultants.

Michael Running Wolf is a citizen of the Northern Cheyenne with Lakota and Blackfeet family ties. He was formerly an engineer for Amazon’s Alexa and a computer science instructor at Northeastern University. Michael is pursuing a PhD at McGill University focused on sovereign AI systems for Indigenous languages.

Caroline Running Wolf is a citizen of the Crow Nation. She is pursuing her PhD at the University of British Columbia, where she researches how immersive technologies (AR/VR/XR) and AI can enhance Indigenous language and culture reclamation.

Dr. Shawn Tsosie (Navajo / Little Shell) is a machine learning scientist and Iraq War veteran. He holds an undergraduate degree from MIT and a master’s degree and PhD from the University of California, Santa Cruz. He leads the modeling work for automatic speech recognition of Indigenous languages.

Dr. Conor Quinn is a revitalization/reclamation linguist who has worked since the mid-1990s on the structure of Indigenous and polysynthetic languages. His research examines how scholarly models can enable practical approaches to community language revitalization/reclamation pedagogy. He leads the development of Minimal Courses, which inform data collection and serve as pedagogical tools.