From July 13 to 19, 2025, Mila researchers and staff traveled to Vancouver for the 42nd International Conference on Machine Learning (ICML 2025). There they presented their work to peers from around the world and, for the first time, offered science communication training.

Training researchers in science communication

Starting this year, ICML asks authors who submit papers to the conference to include lay summaries written for a general audience. As part of this initiative, Julien Besset, senior science communication advisor at Mila, led training workshops at the conference. He taught hundreds of researchers the key principles of communication and storytelling that make their work more engaging and accessible to the public.

More than 40 papers presented at the main conference

This year, Mila researchers presented more than 40 research papers at the main conference and nearly 50 papers at workshops.

Here is a list of Mila-affiliated papers accepted at the conference:

Main conference

- Position: Humanity Faces Existential Risk from Gradual Disempowerment - Jan Kulveit, Raymond Douglas, Nora Ammann, Deger Turan, David Krueger, David Duvenaud

- Scalable First-order Method for Certifying Optimal k-Sparse GLMs - Jiachang Liu, Soroosh Shafiee, Andrea Lodi

- Locate 3D: Real-World Object Localization via Self-Supervised Learning in 3D - Paul McVay, Sergio Arnaud, Ada Martin, Arjun Majumdar, Krishna Murthy, Phillip Thomas, Ruslan Partsey, Daniel Dugas, Abha Gejji, Alexander Sax, Vincent-Pierre Berges, Mikael Henaff, Ayush Jain, Ang Cao, Ishita Prasad, Mrinal Kalakrishnan, Michael G. Rabbat, Nicolas Ballas, Mahmoud Assran, Oleksandr Maksymets, Aravind Rajeswaran, Franziska Meier

- Towards a Mechanistic Explanation of Diffusion Model Generalization - Matthew Niedoba, Berend Zwartsenberg, Kevin Patrick Murphy, Frank N. Wood

- Scaling Trends in Language Model Robustness - Nikolaus H. R. Howe, Ian R. McKenzie, Oskar John Hollinsworth, Michał Zając, Tom Tseng, Aaron David Tucker, Pierre-Luc Bacon, Adam Gleave

- Grokking Beyond the Euclidean Norm of Model Parameters - Pascal Junior Tikeng Notsawo, Guillaume Dumas, Guillaume Rabusseau

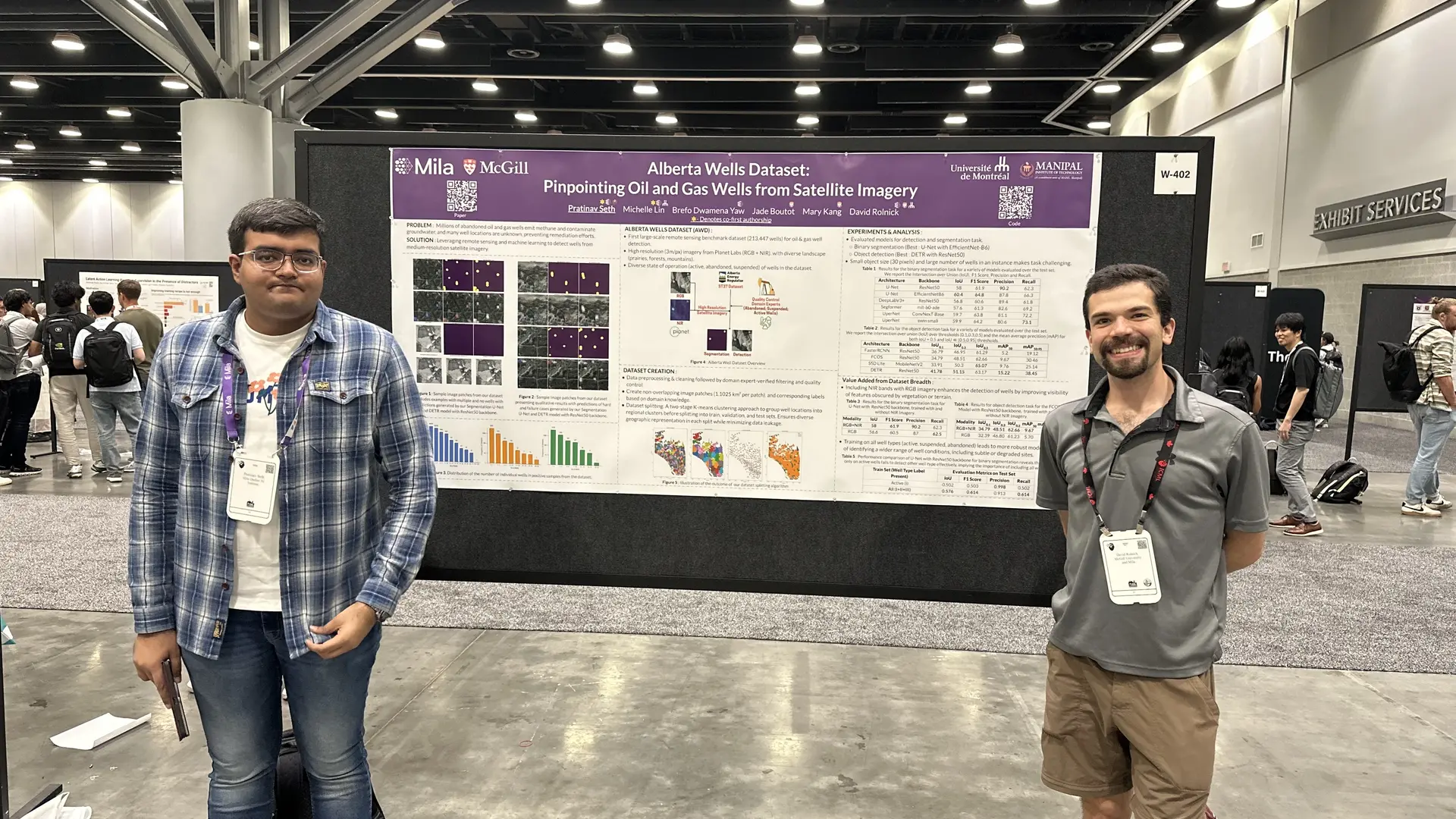

- Alberta Wells Dataset: Pinpointing Oil and Gas Wells from Satellite Imagery - Pratinav Seth, Michelle Lin, Brefo Dwamena Yaw, Jade Boutot, Mary Kang, David Rolnick

- Does learning the right latent variables necessarily improve in-context learning? - Sarthak Mittal, Eric Elmoznino, Leo Gagnon, Sangnie Bhardwaj, Dhanya Sridhar, Guillaume Lajoie

- Addressing Concept Mislabeling in Concept Bottleneck Models Through Preference Optimization - Emiliano Penaloza, Tianyue H. Zhang, Laurent Charlin, Mateo Espinosa Zarlenga

- The Courage to Stop: Overcoming Sunk Cost Fallacy in Deep Reinforcement Learning - Jiashun Liu, Johan Samir Obando Ceron, Pablo Castro, Aaron C. Courville, Ling Pan

- Mechanistic Unlearning: Robust Knowledge Unlearning and Editing via Mechanistic Localization - Phillip Huang Guo, Aaquib Syed, Abhay Sheshadri, Aidan Ewart, Gintare Karolina Dziugaite

- Galileo: Learning Global & Local Features of Many Remote Sensing Modalities - Gabriel Tseng, Anthony Fuller, Marlena Reil, Henry Herzog, Patrick Beukema, Favyen Bastani, James R Green, Evan Shelhamer, Hannah Kerner, David Rolnick

- FLAM: Frame-Wise Language-Audio Modeling - Yusong Wu, Christos Tsirigotis, Ke Chen, Cheng-Zhi Anna Huang, Aaron C. Courville, Oriol Nieto, Prem Seetharaman, Justin Salamon

- Context is Key: A Benchmark for Forecasting with Essential Textual Information - Andrew Robert Williams, Arjun Ashok, Étienne Marcotte, Valentina Zantedeschi, Jithendaraa Subramanian, Roland Riachi, James Requeima, Alexandre Lacoste, Irina Rish, Nicolas Chapados, Alexandre Drouin

- VinePPO: Refining Credit Assignment in RL Training of LLMs - Amirhossein Kazemnejad, Milad Aghajohari, Eva Portelance, Alessandro Sordoni, Siva Reddy, Aaron C. Courville, Nicolas Le Roux

- The Impact of On-Policy Parallelized Data Collection on Deep Reinforcement Learning Networks - Walter Mayor, Johan Samir Obando Ceron, Aaron C. Courville, Pablo Castro

- Generalization Bounds via Meta-Learned Model Representations: PAC-Bayes and Sample Compression Hypernetworks - Benjamin Leblanc, Mathieu Bazinet, Nathaniel D'Amours, Alexandre Drouin, Pascal Germain

- A Theoretical Justification for Asymmetric Actor-Critic Algorithms - Gaspard Lambrechts, Damien Ernst, Aditya Mahajan

- Rejecting Hallucinated State Targets during Planning - Mingde Zhao, Tristan Sylvain, Romain Laroche, Doina Precup, Yoshua Bengio

- Aligning Protein Conformation Ensemble Generation with Physical Feedback - Jiarui Lu, Xiaoyin Chen, Stephen Zhewen Lu, Aurelie Lozano, Vijil Chenthamarakshan, Payel Das, Jian Tang

- The Perils of Optimizing Learned Reward Functions: Low Training Error Does Not Guarantee Low Regret - Lukas Fluri, Leon Lang, Alessandro Abate, Patrick Forré, David Krueger, Joar Max Viktor Skalse

- Monte Carlo Tree Diffusion for System 2 Planning - Jaesik Yoon, Hyeonseo Cho, Doojin Baek, Yoshua Bengio, Sungjin Ahn

- EditLord: Learning Code Transformation Rules for Code Editing - Weichen Li, Albert Jan, Baishakhi Ray, Junfeng Yang, Chengzhi Mao, Kexin Pei

- Self-Play Q-Learners Can Provably Collude in the Iterated Prisoner's Dilemma - Quentin Bertrand, Juan Agustin Duque, Emilio Calvano, Gauthier Gidel

- Outsourced diffusion sampling: Efficient posterior inference in latent spaces of generative models - Siddarth Venkatraman, Mohsin Hasan, Minsu Kim, Luca Scimeca, Marcin Sendera, Yoshua Bengio, Glen Berseth, Nikolay Malkin

- Compositional Risk Minimization - Divyat Mahajan, Mohammad Pezeshki, Charles Arnal, Ioannis Mitliagkas, Kartik Ahuja, Pascal Vincent

- From Language Models over Tokens to Language Models over Characters - Tim Vieira, Benjamin LeBrun, Mario Giulianelli, Juan Luis Gastaldi, Brian DuSell, John Terilla, Timothy J. O'Donnell, Ryan Cotterell

- Mitigating Plasticity Loss in Continual Reinforcement Learning by Reducing Churn - Hongyao Tang, Johan Samir Obando Ceron, Pablo Castro, Aaron C. Courville, Glen Berseth

- Improving the Scaling Laws of Synthetic Data with Deliberate Practice - Reyhane Askari Hemmat, Mohammad Pezeshki, Elvis Dopgima Dohmatob, Florian Bordes, Pietro Astolfi, Melissa Hall, Jakob Verbeek, Michal Drozdzal, Adriana Romero

- AI for Global Climate Cooperation: Modeling Global Climate Negotiations, Agreements, and Long-Term Cooperation in RICE-N - Tianyu Zhang, Andrew Robert Williams, Phillip Wozny, Kai-Hendrik Cohrs, Koen Ponse, Marco Jiralerspong, Soham Rajesh Phade, Sunil Srinivasa, Lu Liu, Yang Zhang, Prateek Gupta, Erman Acar, Irina Rish, Yoshua Bengio, Stephan Zheng

- SafeArena: Evaluating the Safety of Autonomous Web Agents - Ada Defne Tur, Nicholas Meade, Xing Han Lu, Alejandra Zambrano, Arkil Patel, Esin Durmus, Spandana Gella, Karolina Stanczak, Siva Reddy

- Position: Probabilistic Modelling is Sufficient for Causal Inference - Bruno Mlodozeniec, David Krueger, Richard E. Turner

- UI-Vision: A Desktop-centric GUI Benchmark for Visual Perception and Interaction - Shravan Nayak, Xiangru Jian, Kevin Qinghong Lin, Juan A. Rodriguez, Montek Kalsi, Rabiul Awal, Nicolas Chapados, M. Tamer Özsu, Aishwarya Pravin Agrawal, David Vazquez, Chris Pal, Perouz Taslakian, Spandana Gella, Sai Rajeswar

- Language Models over Canonical Byte-Pair Encodings - Tim Vieira, Tianyu Liu, Clemente Pasti, Yahya Emara, Brian DuSell, Benjamin LeBrun, Mario Giulianelli, Juan Luis Gastaldi, Timothy J. O'Donnell, Ryan Cotterell

- Leveraging Per-Instance Privacy for Machine Unlearning - Nazanin Mohammadi Sepahvand, Anvith Thudi, Berivan Isik, Ashmita Bhattacharyya, Nicolas Papernot, Eleni Triantafillou, Daniel M. Roy, Gintare Karolina Dziugaite

- Discovering Symbolic Cognitive Models from Human and Animal Behavior - Pablo Castro, Nenad Tomasev, Ankit Anand, Navodita Sharma, Rishika Mohanta, Aparna Dev, Kuba Perlin, Siddhant Jain, Kyle Levin, Noemi Elteto, Will Dabney, Alexander Novikov, Glenn C Turner, Maria K Eckstein, Nathaniel D. Daw, Kevin J Miller, Kim Stachenfeld

- In-context learning and Occam's razor - Eric Elmoznino, Tom Marty, Tejas Kasetty, Leo Gagnon, Sarthak Mittal, Mahan Fathi, Dhanya Sridhar, Guillaume Lajoie

- TypyBench: Evaluating LLM Type Inference for Untyped Python Repositories - Honghua Dong, Jiacheng Yang, Xun Deng, Yuhe Jiang, Gennady Pekhimenko, Fan Long, Xujie Si

- Network Sparsity Unlocks the Scaling Potential of Deep Reinforcement Learning - Guozheng Ma, Lu Liu, Zilin Wang, Li Shen, Pierre-Luc Bacon, Dacheng Tao

- When to retrain a machine learning model - Florence Regol, Leo Schwinn, Kyle Sprague, Mark J. Coates, Thomas Markovich

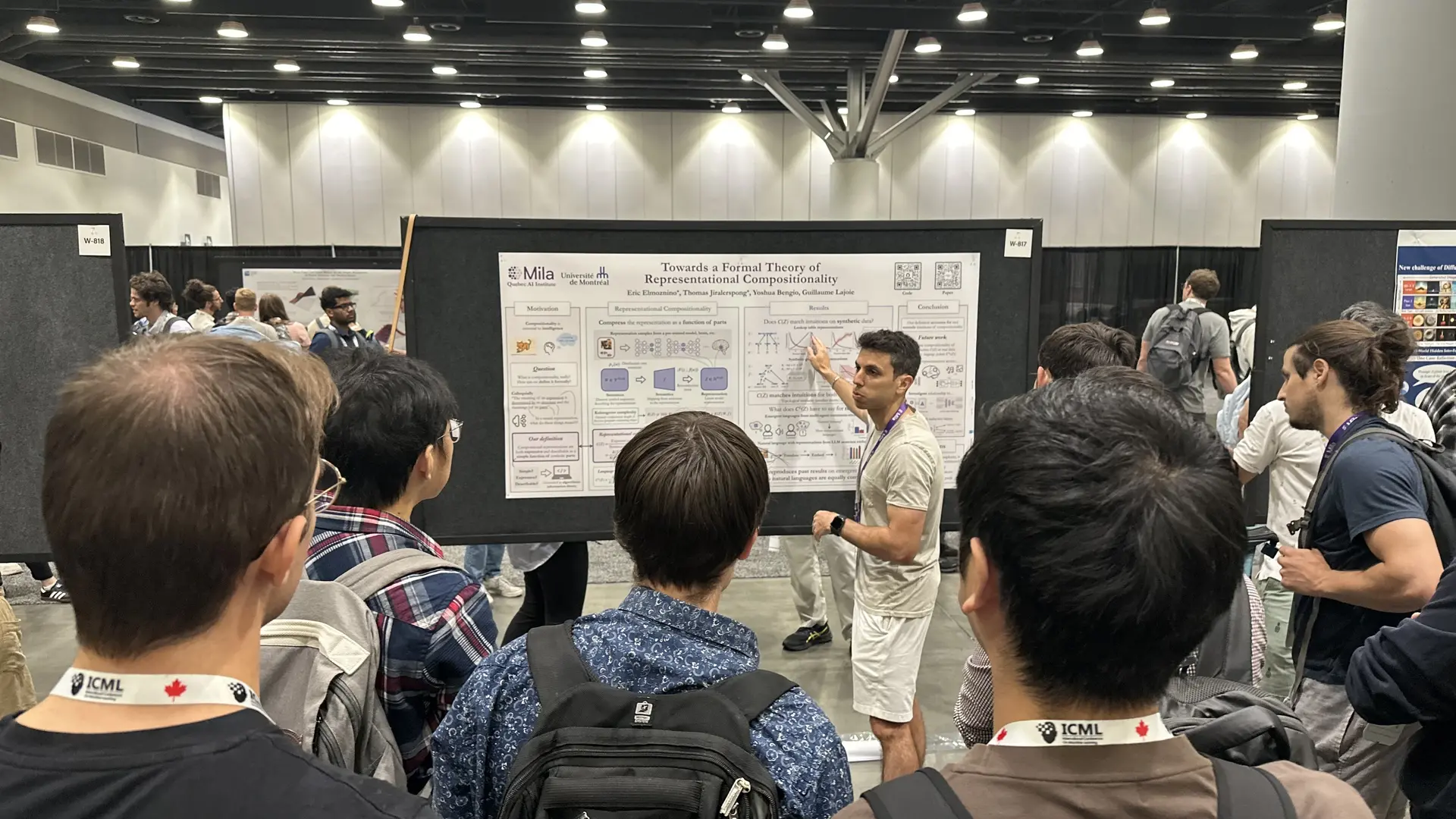

- Towards a Formal Theory of Representational Compositionality - Eric Elmoznino, Thomas Jiralerspong, Yoshua Bengio, Guillaume Lajoie

- SKOLR: Structured Koopman Operator Linear RNN for Time-Series Forecasting - Yitian Zhang, Liheng Ma, Antonios Valkanas, Boris Oreshkin, Mark J. Coates

- PoisonBench: Assessing Language Model Vulnerability to Poisoned Preference Data - Tingchen Fu, Mrinank Sharma, Philip Torr, Shay B. Cohen, David Krueger, Fazl Barez

- The Butterfly Effect: Neural Network Training Trajectories Are Highly Sensitive to Initial Conditions - Devin Kwok, Gül Sena Altıntaş, Colin Raffel, David Rolnick

Workshops

- Putting the Value Back in RL: Better Test-Time Scaling by Unifying LLM Reasoners With Verifiers - Kusha Sareen, Morgane M Moss, Alessandro Sordoni, Rishabh Agarwal, Arian Hosseini

- Boosting LLM Reasoning via Spontaneous Self-Correction - Xutong Zhao, Tengyu Xu, Xuewei Wang, Zhengxing Chen, Di Jin, Liang Tan, Yen-Ting Lin, Zishun Yu, Zhuokai Zhao, Si-Yuan Wang, Yun He, Sinong Wang, Han Fang, A. Chandar, Chen Zhu

- Learning to Solve Complex Problems via Dataset Decomposition - Wanru Zhao, Lucas Caccia, Zhengyan Shi, Minseon Kim, Xingdi Yuan, Weijia Xu, Marc-Alexandre Côté, Alessandro Sordoni

- Discrete Feynman-Kac Correctors - Mohsin Hasan, Marta Skreta, Alan Aspuru-Guzik, Yoshua Bengio, Kirill Neklyudov

- Instilling Parallel Reasoning into Language Models - Matthew Macfarlane, Minseon Kim, Nebojsa Jojic, Weijia Xu, Lucas Caccia, Xingdi Yuan, Wanru Zhao, Zhengyan Shi, Alessandro Sordoni

- HVAC-GRACE: Transferable Building Control via Heterogeneous Graph Neural Network Policies - Anaïs Berkes, Donna Vakalis, David Rolnick, Yoshua Bengio

- Landscape of Thoughts: Visualizing the Reasoning Process of Large Language Models - Zhanke Zhou, Zhaocheng Zhu, Xuan Li, Mikhail Galkin, Xiao Feng, Sanmi Koyejo, Jian Tang, Bo Han

- Robust and Interpretable Relational Reasoning with Large Language Models and Symbolic Solvers - Ge Zhang, Mohammad Alomrani, Hongjian Gu, Jiaming Zhou, Yaochen Hu, Bin Wang, Qun Liu, Mark J. Coates, Yingxue Zhang, Jianye Hao

- Circuit Discovery Helps To Detect LLM Jailbreaking - Paria Mehrbod, Boris Knyazev, Eugene Belilovsky, Guy Wolf, Geraldin Nanfack

- Exploration by Exploitation: Curriculum Learning for Reinforcement Learning Agents through Competence-Based Curriculum Policy Search - Tabitha Edith Lee, Nan Rosemary Ke, Sarvesh Patil, Annya Dahmani, Eunice Yiu, Esra'a Saleh, Alison Gopnik, Oliver Kroemer, Glen Berseth

- Amortized Sampling with Transferable Normalizing Flows - Charlie B. Tan, Majdi Hassan, Leon Klein, Saifuddin Syed, Dominique Beaini, Michael M. Bronstein, Alexander Tong, Kirill Neklyudov

- Learning What Matters: Prioritized Concept Learning via Relative Error-driven Sample Selection - Shivam Chandhok, Qian Yang, Oscar Mañas, Kanishk Jain, Leonid Sigal, Aishwarya Pravin Agrawal

- Next-Token Prediction Should be Ambiguity-Sensitive: A Meta-Learning Perspective - Leo Gagnon, Eric Elmoznino, Sarthak Mittal, Tom Marty, Tejas Kasetty, Dhanya Sridhar, Guillaume Lajoie

- Progressive Inference-Time Annealing of Diffusion Models for Sampling from Boltzmann Densities - Tara Akhound-Sadegh, Jungyoon Lee, Avishek Joey Bose, Valentin De Bortoli, Arnaud Doucet, Michael M. Bronstein, Dominique Beaini, Siamak Ravanbakhsh, Kirill Neklyudov, Alexander Tong

- MuLoCo: Muon is a practical inner optimizer for DiLoCo - Benjamin Thérien, Xiaolong Huang, Irina Rish, Eugene Belilovsky

- LLMs for Experiment Design in Scientific Domains: Are We There Yet? - Rushil Gupta, Jason Hartford, Bang Liu

- Mixture-of-Recursions: Learning Dynamic Recursive Depths for Adaptive Token-Level Thinking - Sangmin Bae, Yujin Kim, Reza Bayat, Sungnyun Kim, Jiyoun Ha, Tal Schuster, Adam Fisch, Hrayr Harutyunyan, Ziwei Ji, Aaron C. Courville, Se-Young Yun

- Model Parallelism With Subnetwork Data Parallelism - Vaibhav Singh, Zafir Khalid, Eugene Belilovsky, Edouard Oyallon

- FORT: Forward-Only Regression Training of Normalizing Flows - Danyal Rehman, Oscar Davis, Jiarui Lu, Jian Tang, Michael M. Bronstein, Yoshua Bengio, Alexander Tong, Avishek Joey Bose

- NovoMolGen: Rethinking Molecular Language Model Pretraining - Kamran Chitsaz, Roshan Balaji, Quentin Fournier, Nirav Pravinbhai Bhatt, A. Chandar

- Beyond Cosine Decay: On the effectiveness of Infinite Learning Rate Schedule for Continual Pre-training - Vaibhav Singh, Paul Janson, Paria Mehrbod, Adam Ibrahim, Irina Rish, Eugene Belilovsky, Benjamin Thérien

- Torsional-GFN: a conditional conformation generator for small molecules - Lena Nehale Ezzine, Alexandra Volokhova, Piotr Gaiński, Luca Scimeca, Emmanuel Bengio, Prudencio Tossou, Yoshua Bengio, Alex Hernandez-Garcia

- Overcoming Long-Context Limitations of State-Space Models via Context-Dependent Sparse Attention - Zhihao Zhan, Jianan Zhao, Zhaocheng Zhu, Jian Tang

- CulturalFrames: Assessing Cultural Expectation Alignment in Text-to-Image Models and Evaluation Metrics - Shravan Nayak, Mehar Bhatia, Xiaofeng Zhang, Verena Rieser, Lisa Anne Hendricks, Sjoerd van Steenkiste, Yash Goyal, Karolina Stanczak, Aishwarya Pravin Agrawal

- What Matters when Modeling Human Behavior using Imitation Learning? - Aneri Muni, Esther Derman, Vincent Taboga, Pierre-Luc Bacon, Erick Delage

- Language models’ activations linearly encode training-order recency - Dmitrii Krasheninnikov, Richard E. Turner, David Krueger

- Robust Reward Modeling via Causal Rubrics - Pragya Srivastava, Harman Singh, Rahul Madhavan, Gandharv Patil, Sravanti Addepalli, Arun Suggala, Rengarajan Aravamudhan, Soumya Sharma, Anirban Laha, Aravindan Raghuveer, Karthikeyan Shanmugam, Doina Precup

- Low-Rank Adaptation Secretly Imitates Differentially Private SGD - Saber Malekmohammadi, Golnoosh Farnadi

- Parity Requires Unified Input Dependence and Negative Eigenvalues in SSMs - Behnoush Khavari, Jayesh Khullar, Mehran Shakerinava, Jerry Huang, Siamak Ravanbakhsh, A. Chandar

- Test Time Adaptation Using Adaptive Quantile Recalibration - Paria Mehrbod, Pedro Vianna, Geraldin Nanfack, Guy Wolf, Eugene Belilovsky

- Geometry-Aware Preference Learning for 3D Texture Generation - AmirHossein Zamani, Tianhao Xie, Amir Aghdam, Tiberiu Popa, Eugene Belilovsky

- Deploying Geospatial Foundation Models in the Real World: Lessons from WorldCereal - Christina Butsko, Gabriel Tseng, Kristof Van Tricht, Giorgia Milli, David Rolnick, Ruben Cartuyvels, Inbal Becker Reshef, Zoltan Szantoi, Hannah Kerner

- Diffusion Tree Sampling: Scalable inference-time alignment of diffusion models - Vineet Jain, Kusha Sareen, Mohammad Pedramfar, Siamak Ravanbakhsh

- TGM: A Modular Framework for Machine Learning on Temporal Graphs - Jacob Chmura, Shenyang Huang, Ali Parviz, Farimah Poursafaei, Michael M. Bronstein, Guillaume Rabusseau, Matthias Fey, Reihaneh Rabbany

- Two-point deterministic equivalence for SGD in random feature models - Alexander Atanasov, Blake Bordelon, Jacob A Zavatone-Veth, Courtney Paquette, Cengiz Pehlevan

- A Meta-Learning Approach to Causal Inference - Dragos Cristian Manta, Philippe Brouillard, Dhanya Sridhar

- Tracing the representation geometry of language models from pretraining to post-training - Melody Zixuan Li, Kumar Krishna Agrawal, Arna Ghosh, Komal Kumar Teru, Guillaume Lajoie, Blake Aaron Richards

- Towards Fair In-Context Learning with Tabular Foundation Models - Patrik Joslin Kenfack, Samira Ebrahimi Kahou, Ulrich Matchi Aïvodji

- Meta-World+: An Improved, Standardized, RL Benchmark - Reginald McLean, Evangelos Chatzaroulas, Luc McCutcheon, Frank Röder, Tianhe Yu, Zhanpeng He, K.R. Zentner, Ryan Julian, J K Terry, Isaac Woungang, Nariman Farsad, Pablo Castro

- Revisiting the Goldilocks Zone in Inhomogeneous Networks - Zacharie Garnier Cuchet, A. Chandar, Ekaterina Lobacheva

- PyLO: Towards Accessible Learned Optimizers in PyTorch - Paul Janson, Benjamin Thérien, Quentin Gregory Anthony, Xiaolong Huang, Abhinav Moudgil, Eugene Belilovsky

- AIF-GEN: Open-Source Platform and Synthetic Dataset Suite for Reinforcement Learning on Large Language Models - Jacob Chmura, Shahrad Mohammadzadeh, Ivan Anokhin, Jacob-Junqi Tian, Mandana Samiei, Taz Scott-Talib, Irina Rish, Doina Precup, Reihaneh Rabbany, Nishanth Anand

- Quantized Disentanglement: A Practical Approach - Vitória Barin-Pacela, Kartik Ahuja, Simon Lacoste-Julien, Pascal Vincent

- Improving Context Fidelity via Native Retrieval-Augmented Reasoning - Suyuchen Wang, Jinlin Wang, Xinyu Wang, Shiqi Li, Xiangru Tang, Sirui Hong, Xiao-Wen Chang, Chenglin Wu, Bang Liu

- DoomArena: A framework for Testing AI Agents Against Evolving Security Threats - Léo Boisvert, Abhay Puri, Gabriel Huang, Mihir Bansal, Chandra Kiran Reddy Evuru, Avinandan Bose, Maryam Fazel, Quentin Cappart, Alexandre Lacoste, Jason Stanley, Alexandre Drouin, Krishnamurthy Dj Dvijotham

- How to Train Your LLM Web Agent: A Statistical Diagnosis - Dheeraj Vattikonda, Santhoshi Ravichandran, Emiliano Penaloza, Hadi Nekoei, Megh Thakkar, Thibault Le Sellier de Chezelles, Nicolas Gontier, Miguel Muñoz-Mármol, Sahar Omidi Shayegan, Stefania Raimondo, Xue Liu, Alexandre Drouin, Laurent Charlin, Alexandre Piché, Alexandre Lacoste, Massimo Caccia

- Silent Sabotage: Injecting Backdoors into AI Agents Through Fine-Tuning - Léo Boisvert, Abhay Puri, Chandra Kiran Reddy Evuru, Joshua Kazdan, Avinandan Bose, Quentin Cappart, Maryam Fazel, Sai Rajeswar, Jason Stanley, Nicolas Chapados, Alexandre Drouin, Krishnamurthy Dj Dvijotham

Oral presentations

Three of these papers were also given oral presentations:

- Improving the Scaling Laws of Synthetic Data with Deliberate Practice, by Reyhane Askari Hemmat, Mohammad Pezeshki, Elvis Dopgima Dohmatob, Florian Bordes, Pietro Astolfi, Melissa Hall, Jakob Verbeek, Michal Drozdzal and Adriana Romero

- Position: Probabilistic Modelling is Sufficient for Causal Inference, by Bruno Mlodozeniec, David Krueger and Richard E. Turner

- Network Sparsity Unlocks the Scaling Potential of Deep Reinforcement Learning, by Guozheng Ma, Lu Liu, Zilin Wang, Li Shen, Pierre-Luc Bacon and Dacheng Tao