A collaboration between Mila and IBM for the development of Oríon

Hyperparameter optimization (HPO) procedures are crucial for learning algorithms to achieve their best performance and essential for comparing them on equal footing (Kadlec et al., 2017; Lucic et al., 2018; Melis et al., 2017). Yet a survey conducted by Bouthillier and Varoquaux (2020) at two of the most prominent machine learning conferences (NeurIPS 2019 and ICLR 2020) shows that most researchers opt for manual tuning and/or rudimentary algorithms rather than automated hyperparameter optimization tools, missing out on improved deep learning workflows.

Additionally, staff at Mila – Quebec Artificial Intelligence Institute surveyed its researchers to investigate why HPO was not being used. Researchers cited three main concerns that discouraged them from adopting HPO:

- HPO tools available at the time of the survey were considered too complicated, with the effort outweighing the possible benefits

- Mistrust of HPO's efficiency, with researchers believing they could do better with manual tuning

- Lack of computational resources to conduct HPO

To address these concerns, Mila and IBM have joined forces to improve the open-source Oríon software project (Bouthillier et al., 2020), a black-box function optimization library originally developed at Mila. The goals of this project are to 1) create a tool that fits researchers' workflows and requires little configuration or manipulation, 2) establish clear benchmarks to convince researchers of HPO's efficiency, and 3) leverage prior knowledge to avoid optimizing from scratch. Below, we provide a brief overview of Oríon and demonstrate how researchers can apply it to their work.

Introduction to Oríon

Oríon is a black-box function optimization library with a key focus on usability and integrability. As a researcher, you can integrate Oríon into your current workflow to tune your models, but you can also use Oríon to develop new optimization algorithms and benchmark them against other algorithms in the same context and under the same conditions.

Since Oríon focuses on black-box optimization, it does not aim to be a machine learning framework or pipeline, or an automated machine learning (AutoML) tool (Hutter et al., 2019). However, we do encourage developers to integrate Oríon into such systems as a component.

IBM and Mila have been collaborating closely since early 2020 to drive Oríon forward and make the technology more accessible to researchers. The following section walks through a brief usage example with scikit-learn to introduce Oríon and help researchers understand how to use it and what it offers.

Usage Example

In this usage example, we demonstrate how to adapt an existing script to Oríon, how to execute hyperparameter optimization with Oríon, and how to monitor experiments.

Let's begin with the core of the example. Consider the following short script, which trains an MLP classifier on the Iris dataset.

```python
import argparse

from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

# Parse the learning rate hyperparameter, given as the command-line argument --lr.
parser = argparse.ArgumentParser()
parser.add_argument('--lr', type=float)
inputs = parser.parse_args()

# Load the Iris dataset and split it into training and testing sets.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=1)

# Train the model on the training set with the specified hyperparameters.
clf = MLPClassifier(learning_rate_init=inputs.lr)
clf.fit(X_train, y_train)

# Evaluate the accuracy on the testing set.
accuracy = clf.score(X_test, y_test)

print(accuracy)
```

In this script, we first use argparse to handle the command-line argument --lr, the learning rate used to optimize the MLP. We then load the Iris dataset and divide it into a training set and a test set. We train the MLP on the training set and then evaluate its accuracy on the test set. The script, saved as blog.py, can be executed with the following command line.

```shell
$ python blog.py --lr 0.01
```

This loads the dataset, trains the MLP with a learning rate of 0.01, evaluates the accuracy of the MLP on the test set, and prints the accuracy. To optimize the learning rate hyperparameter, we need to add the following two lines at the end of the script to report the result to Oríon.

```python
from orion.client import report_objective

report_objective(1 - accuracy)
```

Since Oríon minimizes the objective, we report the error rate, 1 - accuracy, rather than the accuracy itself. To execute the script with Oríon, we call orion hunt <your command>, where <your command> in this case is python blog.py --lr 0.01. It is best to always use --debug mode when testing for the first time. This forces Oríon to use a non-persistent in-memory database, which requires no configuration and avoids cluttering the database. Experiments must be identified by a name; for this example, we pass the name 'test' with -n test. To limit execution time, we set the maximum number of hyperparameter values to try to 10 with the argument --exp-max-trials 10. Finally, we replace the learning rate --lr 0.01 with --lr~'loguniform(0.0001, 0.1)'. This tells Oríon to explore learning rate values drawn from a log-uniform distribution between 0.0001 and 0.1.

```shell
$ orion --debug hunt -n test --exp-max-trials 10 \
    python blog.py --lr~'loguniform(0.0001, 0.1)'
```

This command spawns an Oríon worker, which runs the hyperparameter optimization for up to 10 trials before printing the final statistics.
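The log-uniform prior used above spreads samples evenly across orders of magnitude, so roughly as many trials fall between 0.0001 and 0.001 as between 0.01 and 0.1. As a minimal sketch of that sampling rule (plain Python, not Oríon's internal code; the helper name `sample_loguniform` is hypothetical), one can draw a uniform value in log space and exponentiate it:

```python
import math
import random


def sample_loguniform(low, high, rng):
    """Hypothetical helper: draw a value whose logarithm is uniform on [log(low), log(high)]."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


rng = random.Random(0)
samples = [sample_loguniform(1e-4, 1e-1, rng) for _ in range(30_000)]

# Count how many samples fall in each decade of the range.
decades = [(1e-4, 1e-3), (1e-3, 1e-2), (1e-2, 1e-1)]
counts = [sum(lo <= s < hi for s in samples) for lo, hi in decades]
print(counts)  # each decade receives roughly one third of the samples
```

A plain uniform prior over the same range would instead put about 90% of the samples above 0.01, leaving small learning rates barely explored.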

This example with --debug does not preserve the results in the database. For persistent results, we need to configure a database. Fortunately, Oríon comes with a native database called PickledDB that is very simple to set up, requiring only a file name. Let's save our configuration in a file called blog.yaml.

```yaml
storage:
  database:
    type: 'pickleddb'
    host: 'blog.pkl'

experiment:
  name: 'blog-example'
  max_trials: 10
```

In this file, we configure the database to use the 'pickleddb' backend, with the database saved at 'blog.pkl'. We also take this opportunity to add the experiment name and the maximum number of trials to the configuration file, which simplifies the command line call. If you prefer, you could define only the database in the configuration file and pass the other options as command line arguments. Hybrid configurations are supported, with command line arguments taking precedence over configuration file options. Oríon can be executed with this configuration file using the following command line call.
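The precedence rule can be pictured as a recursive dictionary merge in which command-line values override values read from the configuration file. The sketch below is a hypothetical illustration of that rule, not Oríon's configuration code; the helper `merge_config` and the `max_trials` override are assumptions chosen to mirror blog.yaml.

```python
import copy


def merge_config(file_config, cli_config):
    """Hypothetical helper: return a new dict where cli_config values take precedence.

    Nested dictionaries are merged key by key instead of being replaced wholesale.
    """
    merged = copy.deepcopy(file_config)
    for key, value in cli_config.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_config(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Values mirroring blog.yaml.
file_config = {
    "storage": {"database": {"type": "pickleddb", "host": "blog.pkl"}},
    "experiment": {"name": "blog-example", "max_trials": 10},
}
# Hypothetical command-line override of the trial budget only.
cli_config = {"experiment": {"max_trials": 20}}

config = merge_config(file_config, cli_config)
print(config["experiment"])  # {'name': 'blog-example', 'max_trials': 20}
```

Merging nested sections key by key is what allows the database settings to stay in the file while a single experiment option is overridden on the command line.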

```shell
$ orion hunt --config blog.yaml python blog.py --lr~'loguniform(0.0001, 0.1)'
```

Using a persistent database has another advantage: you can now scale your hyperparameter optimization with multiple workers in parallel. Running the same command line call multiple times spawns as many workers, each connecting to the database to synchronize the parallel hyperparameter optimization. For example, to spawn 4 workers:

```shell
$ orion hunt --config blog.yaml python blog.py --lr~'loguniform(0.0001, 0.1)' &
$ orion hunt --config blog.yaml python blog.py --lr~'loguniform(0.0001, 0.1)' &
$ orion hunt --config blog.yaml python blog.py --lr~'loguniform(0.0001, 0.1)' &
$ orion hunt --config blog.yaml python blog.py --lr~'loguniform(0.0001, 0.1)'
```

See our tutorial to spawn larger pools of workers on clusters.
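Conceptually, each worker repeatedly asks the shared database for a trial that no other worker has reserved, evaluates it, and writes the result back. The sketch below mimics that pattern with threads and a lock-protected in-memory list standing in for the database. `SharedStore`, `reserve`, `complete`, and the toy objective are hypothetical names; trials are pre-generated here for simplicity, whereas in practice the optimization algorithm produces new trials as the search progresses, and Oríon synchronizes through its storage backend rather than a Python lock.

```python
import threading


class SharedStore:
    """Hypothetical stand-in for the shared database: trials guarded by a lock."""

    def __init__(self, learning_rates):
        self._lock = threading.Lock()
        self.trials = [{"lr": lr, "status": "new", "objective": None} for lr in learning_rates]

    def reserve(self):
        """Atomically mark one 'new' trial as 'reserved' and return it (or None if none left)."""
        with self._lock:
            for trial in self.trials:
                if trial["status"] == "new":
                    trial["status"] = "reserved"
                    return trial
        return None

    def complete(self, trial, objective):
        """Record the result of a reserved trial."""
        with self._lock:
            trial["objective"] = objective
            trial["status"] = "completed"


def toy_objective(lr):
    # Hypothetical objective whose minimum is at lr = 0.01.
    return (lr - 0.01) ** 2


def worker(store):
    # Keep reserving and evaluating trials until none are left.
    while (trial := store.reserve()) is not None:
        store.complete(trial, toy_objective(trial["lr"]))


store = SharedStore([0.0001, 0.001, 0.01, 0.05, 0.1] * 2)
threads = [threading.Thread(target=worker, args=(store,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

best = min(store.trials, key=lambda trial: trial["objective"])
print(len(store.trials), best["lr"])  # 10 0.01
```

Because reservation is atomic, no trial is evaluated twice even though four workers pull from the same store.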

Additional Features

There are many more features in Oríon; in this article, we have only scratched the surface. See our detailed documentation for an in-depth view of all its capabilities. In summary:

- Hyperparameter distributions in the search space can be real-valued, discrete, or a list of choices. See our documentation on priors for more information.

- In our example, we defined the priors using the command line, but they can also be defined using a configuration file.

- Oríon is language agnostic: it can be used with scripts written in any programming language, not only Python.

- There is a Python API available in addition to the command line tools presented here.

- By default, Oríon uses the random search algorithm. However, several other algorithms are available in Oríon, such as Hyperband/ASHA, TPE, Evolution-ES, and Bayesian optimization.

- Oríon provides three basic commands to monitor experiments (status, info, and list), in addition to a Python API and a REST server.

- Experiments are versioned, which enables better tracking of results.

- A cookiecutter is available to bootstrap algorithm plugin implementation in Oríon.
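Since random search is the default, it is worth seeing how little it involves: each hyperparameter is drawn independently from its prior, and the best configuration observed is kept. The sketch below runs random search over a hypothetical search space mixing a real-valued (log-uniform), an integer, and a categorical dimension; the dimension names, bounds, and toy objective are assumptions for illustration, and the code is not Oríon's implementation.

```python
import math
import random

# Hypothetical search space: one sampler per hyperparameter.
SPACE = {
    "lr": lambda rng: math.exp(rng.uniform(math.log(1e-4), math.log(1e-1))),  # real, log-uniform
    "hidden_units": lambda rng: rng.randint(16, 256),  # integer, inclusive bounds
    "activation": lambda rng: rng.choice(["relu", "tanh", "logistic"]),  # categorical
}


def toy_objective(params):
    """Hypothetical objective to minimize: best near lr=0.01, 128 units, relu."""
    penalty = 0.0 if params["activation"] == "relu" else 0.5
    return (math.log10(params["lr"]) + 2) ** 2 + abs(params["hidden_units"] - 128) / 128 + penalty


def random_search(space, objective, max_trials, seed=0):
    """Sample every dimension independently for each trial and keep the best result."""
    rng = random.Random(seed)
    best_params, best_value = None, math.inf
    for _ in range(max_trials):
        params = {name: sample(rng) for name, sample in space.items()}
        value = objective(params)
        if value < best_value:
            best_params, best_value = params, value
    return best_params, best_value


params, value = random_search(SPACE, toy_objective, max_trials=100)
print(params, round(value, 3))
```

Because trials are independent, random search parallelizes trivially, which makes it a sensible default baseline.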

Recent Developments and Current Work

Researchers at Mila and IBM have been focusing on three main project goals, as presented below and discussed further in the following subsections:

- Implementation of state-of-the-art algorithms and benchmarks

- Integration of visualization tools and dashboard

- Further research on transfer learning for hyperparameter optimization

New Algorithms and Benchmark

In the first phase of the collaboration, Mila and IBM integrated several existing state-of-the-art hyperparameter optimization algorithms into Oríon to provide a broad set of efficient algorithms. These algorithms, presented below, are now available in Oríon.

Algorithms

Hyperband (Li et al., 2017)

This algorithm leverages partial information available during training, such as the validation objective at each epoch, to speed up hyperparameter optimization by reallocating resources to the most promising configurations.
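Hyperband builds on successive halving: start many configurations with a small budget, keep the best 1/eta of them, and multiply the survivors' budget by eta. The sketch below runs a single successive-halving bracket on a hypothetical learning-curve function; full Hyperband runs several such brackets with different trade-offs between the number of configurations and the starting budget, and Oríon's implementation differs in its details.

```python
import random


def learning_curve(config, epochs):
    """Hypothetical validation loss after `epochs` epochs (lower is better).

    Each config converges to its own final loss at its own speed, so early
    rankings can differ from final rankings.
    """
    return config["final_loss"] + config["slowness"] / (1 + epochs)


def successive_halving(configs, min_epochs=1, eta=3):
    """Run one bracket: evaluate, keep the best 1/eta, multiply the budget by eta."""
    epochs, spent = min_epochs, 0
    survivors = list(configs)
    while len(survivors) > 1:
        spent += len(survivors) * epochs  # each survivor is trained for `epochs` epochs
        survivors.sort(key=lambda c: learning_curve(c, epochs))
        survivors = survivors[: max(1, len(survivors) // eta)]
        epochs *= eta
    return survivors[0], spent


rng = random.Random(42)
configs = [{"id": i, "final_loss": rng.uniform(0.1, 1.0), "slowness": rng.uniform(0.0, 2.0)}
           for i in range(27)]
best, spent = successive_halving(configs)
print(best["id"], spent)
```

Counting every evaluation as a training run from scratch, this bracket spends 27 + 27 + 27 = 81 epochs, whereas training all 27 configurations for 9 epochs would cost 243.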

Tree-structured Parzen Estimator (TPE) (Bergstra et al., 2011)

This variant of Bayesian optimization enables seamless parallelization and has lower computational complexity. It splits the configurations evaluated so far into two groups, the top-k best-performing configurations in one group and the rest in the other, and then models each group with a separate Parzen estimator. The Parzen estimator of the best group serves as a generator to sample new candidates, and the ratio of the two estimators is used to select the most promising candidates.
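The core TPE step can be sketched for a single real hyperparameter: split past observations by rank, fit one Parzen (kernel density) estimator to the good group and one to the rest, draw candidates from the good density, and keep the candidate with the highest ratio of good to bad density. The sketch below uses Gaussian kernels with a fixed bandwidth and a fixed split fraction `gamma`; these choices, the helper names, and the toy objective are simplifying assumptions rather than the exact formulation of Bergstra et al. (2011) or Oríon's implementation.

```python
import math
import random


def parzen_pdf(x, centers, bandwidth):
    """Density of an equally weighted mixture of Gaussians centred on `centers`."""
    norm = 1.0 / (len(centers) * bandwidth * math.sqrt(2 * math.pi))
    return norm * sum(math.exp(-0.5 * ((x - c) / bandwidth) ** 2) for c in centers)


def tpe_suggest(history, rng, low, high, gamma=0.25, n_candidates=24, bandwidth=0.1):
    """Propose the next x from history = [(x, loss), ...]; needs at least two points."""
    ranked = sorted(history, key=lambda pair: pair[1])
    n_good = min(len(ranked) - 1, max(1, int(gamma * len(ranked))))
    good = [x for x, _ in ranked[:n_good]]
    bad = [x for x, _ in ranked[n_good:]]
    # Draw candidates from the "good" estimator: pick a good point, add Gaussian noise, clip.
    candidates = [min(high, max(low, rng.gauss(rng.choice(good), bandwidth)))
                  for _ in range(n_candidates)]
    # Keep the candidate maximizing l(x) / g(x), the density ratio used by TPE.
    return max(candidates, key=lambda x: parzen_pdf(x, good, bandwidth)
               / (parzen_pdf(x, bad, bandwidth) + 1e-12))


def toy_loss(x):
    # Hypothetical 1-D objective with its minimum at x = 0.3.
    return (x - 0.3) ** 2


rng = random.Random(0)
history = [(x, toy_loss(x)) for x in (rng.uniform(0, 1) for _ in range(10))]  # random warm-up
for _ in range(30):
    x = tpe_suggest(history, rng, low=0.0, high=1.0)
    history.append((x, toy_loss(x)))

best_x, best_loss = min(history, key=lambda pair: pair[1])
print(round(best_x, 3), round(best_loss, 5))
```

Each suggestion costs only a few density evaluations over past points, which is the source of TPE's lower computational complexity compared with Gaussian-process-based optimizers.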

Evolution-ES (So et al., 2019)

This evolutionary algorithm uses tournament-based selection with gradually increasing resource allocation at each generation, providing faster progress on large batches of random configurations in the same spirit as Hyperband.
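A generic evolutionary loop in this spirit can be sketched as follows: each generation, parents are chosen by tournament (the best of k randomly drawn members), mutated with Gaussian noise, and the children are evaluated with a budget that doubles every generation. This is a simplified illustration under assumed settings (population size, tournament size, mutation scale, toy objective), not the exact procedure of So et al. (2019) or Oríon's Evolution-ES.

```python
import random


def evaluate(x, epochs):
    """Hypothetical loss: improves with training epochs, minimized at x = 2."""
    return (x - 2.0) ** 2 + 1.0 / epochs


def tournament_select(population, rng, k=3):
    """Pick k random members and return the one with the lowest loss."""
    contenders = rng.sample(population, k)
    return min(contenders, key=lambda member: member["loss"])


def evolve(generations=10, pop_size=20, seed=0):
    rng = random.Random(seed)
    epochs = 1
    population = [{"x": rng.uniform(-5, 5)} for _ in range(pop_size)]
    for member in population:
        member["loss"] = evaluate(member["x"], epochs)
    for _ in range(generations):
        epochs *= 2  # gradually increase the resources given to each generation
        children = []
        for _ in range(pop_size):
            parent = tournament_select(population, rng)
            child_x = parent["x"] + rng.gauss(0, 0.5)  # Gaussian mutation
            children.append({"x": child_x, "loss": evaluate(child_x, epochs)})
        population = children  # members of a generation share the same budget
    return min(population, key=lambda member: member["loss"]), epochs


best, epochs = evolve()
print(round(best["x"], 2), epochs)
```

Comparing members only within a generation keeps the comparison fair, since every member of a generation was evaluated with the same budget.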

Coming Next

The implementation of a wrapper for the RoBO library (Klein et al., 2017) will provide access to a performant but computationally expensive Bayesian optimizer based on Gaussian processes with MCMC sampling, as well as to DNGO (Snoek et al., 2015) and BOHAMIANN (Springenberg et al., 2016).

Benchmark

To demonstrate the utility of different hyperparameter optimization algorithms, Mila and IBM are working together to build a benchmarking module in Oríon covering a variety of assessments and tasks. This variety is essential to ensure sufficient coverage of most use cases encountered in research.

For each task, optimization algorithms can be benchmarked based on various assessment scenarios:

- Time to result: Which algorithm reaches a given threshold first (without parallelism)?

- Average performance: For a given (large) budget, which algorithm finds the best configuration?

- Search space size: Which algorithms perform better in small or large search spaces?

- Search space dimension type: Which algorithm performs better on continuous or categorical dimensions?

- Parallel execution advantage: How does the performance of algorithms scale with parallelism?

- Sensitivity to algorithm parameters (ease of use): Which algorithm is least sensitive to changes in its own hyperparameters?
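The first two scenarios reduce to simple statistics over the sequence of objectives each run observes. The helpers below are a hypothetical sketch of those metrics, not the API of Oríon's benchmarking module; the objective histories are made-up numbers used only to exercise the code, not benchmark results.

```python
from statistics import mean


def best_so_far(objectives):
    """Running minimum of the objective over completed trials (lower is better)."""
    best, curve = float("inf"), []
    for value in objectives:
        best = min(best, value)
        curve.append(best)
    return curve


def time_to_result(objectives, threshold):
    """Number of trials needed to first reach `threshold`, or None if never reached."""
    for trial_index, value in enumerate(best_so_far(objectives), start=1):
        if value <= threshold:
            return trial_index
    return None


def average_performance(runs):
    """Mean of the best objective found in each run (one run per random seed)."""
    return mean(min(run) for run in runs)


# Made-up objective histories for two repetitions of one algorithm (illustration only).
runs = [[0.9, 0.75, 0.75, 0.5], [1.0, 0.75, 0.5, 0.25]]
print([time_to_result(run, threshold=0.5) for run in runs])  # [4, 3]
print(average_performance(runs))  # 0.375
```

Repeating each run over several seeds matters because a single run of a stochastic optimizer can be unrepresentatively lucky or unlucky.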

For researchers creating new hyperparameter optimization algorithms using Oríon, we provide tools to help them assess the performance of their algorithms. Included is a set of predefined standard tasks on which to assess HPO algorithms. In addition to the computationally expensive real tasks, Oríon provides synthetic functions for quick sanity tests and emulators for cheap but relatively faithful assessments:

- Synthetic functions, e.g. Branin, Rosenbrock, COCO (Hansen et al., 2020)

- Emulators, e.g. PROFET (Klein et al 2019), HPOlib2 (Eggensperger et al 2013)

- Real tasks, e.g. classification, regression, MLPerf tasks (Reddi et al 2020)
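Synthetic functions are useful for sanity tests because they are cheap to evaluate and their optima are known. Below are the standard textbook definitions of Branin (with its usual constants) and Rosenbrock in plain Python; how Oríon's benchmark module exposes these functions is not shown here.

```python
import math


def branin(x1, x2):
    """Branin function on x1 in [-5, 10], x2 in [0, 15]; global minimum ~0.397887."""
    a, b, c = 1.0, 5.1 / (4 * math.pi ** 2), 5.0 / math.pi
    r, s, t = 6.0, 10.0, 1.0 / (8 * math.pi)
    return a * (x2 - b * x1 ** 2 + c * x1 - r) ** 2 + s * (1 - t) * math.cos(x1) + s


def rosenbrock(xs):
    """Rosenbrock function in any dimension; global minimum 0 at (1, ..., 1)."""
    return sum(100.0 * (xs[i + 1] - xs[i] ** 2) ** 2 + (1 - xs[i]) ** 2
               for i in range(len(xs) - 1))


print(round(branin(math.pi, 2.275), 6))  # 0.397887
print(rosenbrock([1.0, 1.0, 1.0]))  # 0.0
```

Branin has three global minima, at (-π, 12.275), (π, 2.275), and (9.42478, 2.475), which makes it a quick check that an optimizer does not get stuck on a single basin.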

Dashboard and Visualizations

Visualizations are essential to interpret the results of user experiments or benchmarks. While numerical results can convey the information required to compare the performance of algorithms, visualizations leverage humans' innate ability to compare, count, and recognize patterns. Complex collections of data, such as groups of experiments or benchmarks, cannot be interpreted easily from numbers alone and require graphical representations.

In Oríon, Mila and IBM have added support for both standalone visualizations, usable in Jupyter notebooks for example, and a centralized dashboard providing a view of the status of experiments and an analysis of results. A new REST server for Oríon was developed as part of this collaboration to support the dashboard; it can also be used independently of the dashboard to support third-party libraries.

Two types of visualizations will be supported: 1) visualizations to improve the user experience, such as views of configurations, organization of prior experiments, and monitoring of the status of ongoing experiments; and 2) visualizations to improve the interpretability of results, such as regret curves of the optimization, parallel coordinate plots, partial dependence plots, fANOVA bar plots and marginal curves (Hutter et al., 2014), and local parameter importance (Biedenkapp et al., 2018).
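The regret curve is among the simplest of these plots to compute: after each trial, take the best objective found so far and subtract the known optimum (available for synthetic functions and emulators). A minimal sketch follows; the objective values and the optimum are made up for illustration, and the resulting list would typically be plotted against the trial index.

```python
from itertools import accumulate

# Made-up objective values in completion order (illustration only).
objectives = [0.9, 0.6, 0.7, 0.45, 0.5, 0.41]
OPTIMUM = 0.4  # assumed known optimum of the task

# Best objective found so far after each trial, then its distance to the optimum.
best_so_far = list(accumulate(objectives, min))
regret = [round(best - OPTIMUM, 2) for best in best_so_far]
print(regret)  # [0.5, 0.2, 0.2, 0.05, 0.05, 0.01]
```

The curve is non-increasing by construction, so overlaying the curves of several algorithms shows at a glance which one improves fastest.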

Efficient HPO with Warm-start

As training deep learning models can take several days, hyperparameter optimization, which requires many training runs, becomes prohibitively expensive for most researchers. Manual tuning may not be advisable for attaining the best performance of an algorithm or for providing reliable objective measures for baselines (which would require spending days tuning the hyperparameters of a baseline), but it has the advantage of being efficient for small budgets. Researchers who tune their hyperparameters manually can reuse their prior knowledge from tuning similar algorithms on similar tasks. Leveraging this information within hyperparameter optimization algorithms would enable more efficient optimization for small budgets.

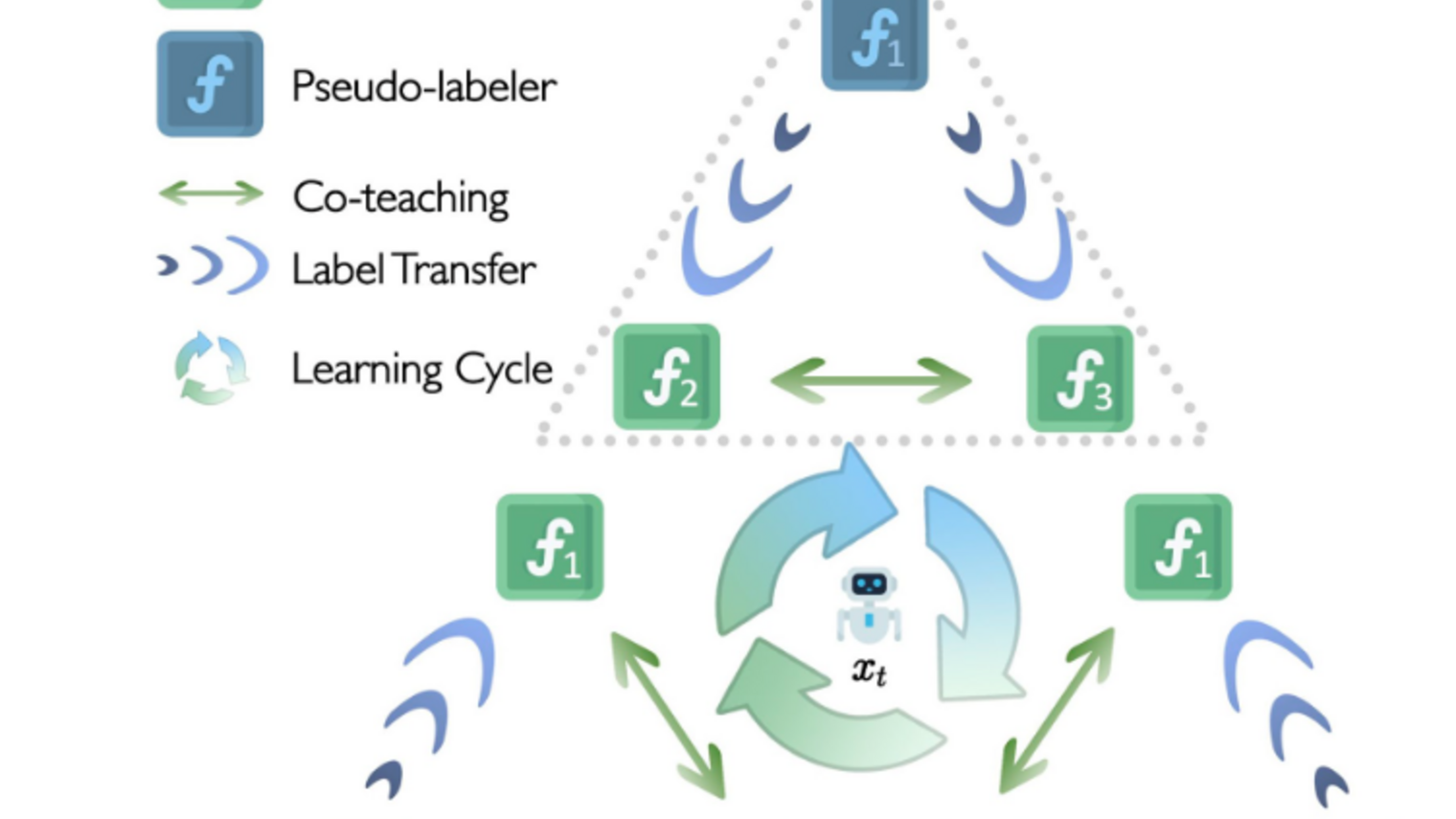

Existing work on transfer learning for hyperparameter optimization focuses on transferring knowledge across different datasets for identical learning algorithms and hyperparameters (Bardenet et al., 2013; Law et al., 2019; Perrone et al., 2018; Salinas et al., 2020; Yogatama & Mann, 2014). While this is a good first step, it remains very constraining in practice. The goal of the collaborative research between Mila and IBM is to generalize existing solutions to enable transfer between similar search spaces, where the underlying learning algorithms and the number of hyperparameters may vary.

Oríon provides an Experiment Version Control (EVC) system that tracks previous hyperparameter optimization experiments. It serves as a versioning tool for the experiment iterations conducted by researchers, but could also serve as a prior knowledge graph that researchers can reuse to warm-start optimization of subsequent experiments. By generalizing transfer learning solutions, we will enable native warm-starting within Oríon.
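One naive way to reuse a previous experiment when the search space changes is to project its best trials onto the new space: keep the values of shared hyperparameters, fill in defaults for new ones, ignore removed ones, and discard configurations that fall outside the new bounds. The projected points could then be evaluated first in the new experiment. This is a hypothetical sketch for illustration only; it is not the transfer method being researched by Mila and IBM, and the trial records, dimension names, and defaults are assumptions.

```python
# Hypothetical trials from a previous experiment version (made-up values).
prior_trials = [
    {"params": {"lr": 0.01, "momentum": 0.9}, "objective": 0.12},
    {"params": {"lr": 0.2, "momentum": 0.5}, "objective": 0.35},
    {"params": {"lr": 0.001, "momentum": 0.99}, "objective": 0.20},
]

# Hypothetical new search space: 'momentum' removed, 'weight_decay' added, lr bounds narrowed.
new_space = {
    "lr": {"low": 1e-4, "high": 0.1, "default": 0.01},
    "weight_decay": {"low": 0.0, "high": 0.01, "default": 0.0},
}


def project_trial(params, space):
    """Map a prior configuration onto `space`, or return None if it falls outside it.

    Shared dimensions keep their value, new dimensions get their default,
    and dropped dimensions are ignored.
    """
    projected = {}
    for name, dim in space.items():
        value = params.get(name, dim["default"])
        if not dim["low"] <= value <= dim["high"]:
            return None
        projected[name] = value
    return projected


def warm_start_points(trials, space, k=2):
    """Return up to k distinct projected configurations, best prior objective first."""
    points = []
    for trial in sorted(trials, key=lambda t: t["objective"]):
        projected = project_trial(trial["params"], space)
        if projected is not None and projected not in points:
            points.append(projected)
        if len(points) == k:
            break
    return points


print(warm_start_points(prior_trials, new_space))
# [{'lr': 0.01, 'weight_decay': 0.0}, {'lr': 0.001, 'weight_decay': 0.0}]
```

Filling new dimensions with a single default ignores how they interact with the transferred values, which is one reason more principled transfer methods are an open research question.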

Conclusion

Oríon provides researchers with a simple and effective tool for hyperparameter optimization. The collaboration between Mila and IBM is driving its development further with additional optimization algorithms and new monitoring and analysis tools, in the firm belief that our work is an important step towards wider adoption of hyperparameter optimization tools within the machine learning community.

Although Oríon has been developed with the main focus on usability for researchers, its flexible design also makes it a great backend to complement existing machine learning frameworks. An example of this is the potential of integrating Oríon into IBM Watson Machine Learning Accelerator, an enterprise-scale accelerated deep learning offering for model training, tuning, and inference.

For more information on Oríon, please consult our documentation.

Acknowledgements

A special thank you to all of the contributors who made this project possible!

Mila Team

PI: Irina Rish (Associate Professor Université de Montréal, Mila)

Frédéric Osterrath (Director Innovation Development and Technology (IDT), Mila)

Xavier Bouthillier (PhD Student, Deep Learning Researcher and Developer, Mila)

Thomas Schweizer (Research Developer, Mila)

Fabrice Normandin (Master Student, Université de Montréal, Mila)

Nadhir Hassen (Master Student, Université de Montréal, Mila)

Lucas Cecchi (Intern, Mila)

IBM Team

IBM Lead: Yonggang Hu (Distinguished Engineer, Spectrum Computing Group, IBM)

Lin BJ Dong (Senior Software Engineer, Spectrum Computing Group, IBM)

Chao C Xue (Staff Research Member, IBM Research)

Jun Feng Liu (Senior Technical Staff Member, Spectrum Computing Group, IBM)

Sean Wagner (Research Scientist, IBM Canada National Innovation Team, IBM)

References

Bardenet, R., Brendel, M., Kégl, B., & Sebag, M. (2013, February). Collaborative hyperparameter tuning. In International conference on machine learning (pp. 199-207).

Bergstra, J. S., Bardenet, R., Bengio, Y., & Kégl, B. (2011). Algorithms for hyper-parameter optimization. In Advances in neural information processing systems (pp. 2546-2554).

Biedenkapp, A., Marben, J., Lindauer, M., & Hutter, F. (2018, June). CAVE: Configuration assessment, visualization and evaluation. In International Conference on Learning and Intelligent Optimization (pp. 115-130). Springer, Cham.

Bouthillier, X., Corneau-Tremblay, F., Schweizer, T., Tsirigotis, C., Bronzi, M., Dong, L., … Lamblin, P. (2020, July 2). Oríon (doi:10.5281/zenodo.3478592). Zenodo.

Bouthillier, X., & Varoquaux, G. (2020). Survey of machine-learning experimental methods at NeurIPS2019 and ICLR2020 (Doctoral dissertation, Inria Saclay Ile de France).

Eggensperger, K., Feurer, M., Hutter, F., Bergstra, J., Snoek, J., Hoos, H., & Leyton-Brown, K. (2013, December). Towards an empirical foundation for assessing Bayesian optimization of hyperparameters. In NIPS workshop on Bayesian Optimization in Theory and Practice (Vol. 10, p. 3).

Hansen, N., Auger, A., Ros, R., Mersmann, O., Tušar, T., & Brockhoff, D. (2020). COCO: A platform for comparing continuous optimizers in a black-box setting. Optimization Methods and Software, 1-31.

Hutter, F., Hoos, H., & Leyton-Brown, K. (2014, January). An efficient approach for assessing hyperparameter importance. In International conference on machine learning (pp. 754-762). PMLR.

Hutter, F., Kotthoff, L., & Vanschoren, J. (2019). Automated machine learning: methods, systems, challenges (p. 219). Springer Nature.

Kadlec, R., Bajgar, O., & Kleindienst, J. (2017). Knowledge base completion: Baselines strike back. arXiv preprint arXiv:1705.10744.

Klein, A., Falkner, S., Mansur, N., & Hutter, F. (2017, December). RoBO: A flexible and robust Bayesian optimization framework in Python. In NIPS 2017 Bayesian Optimization Workshop.

Klein, A., Dai, Z., Hutter, F., Lawrence, N., & Gonzalez, J. (2019). Meta-surrogate benchmarking for hyperparameter optimization. In Advances in Neural Information Processing Systems (pp. 6270-6280).

Law, H. C., Zhao, P., Chan, L. S., Huang, J., & Sejdinovic, D. (2019). Hyperparameter learning via distributional transfer. In Advances in Neural Information Processing Systems (pp. 6804-6815).

Li, L., Jamieson, K., DeSalvo, G., Rostamizadeh, A., & Talwalkar, A. (2017). Hyperband: A novel bandit-based approach to hyperparameter optimization. The Journal of Machine Learning Research, 18(1), 6765-6816.

Lucic, M., Kurach, K., Michalski, M., Gelly, S., & Bousquet, O. (2018). Are GANs created equal? A large-scale study. In Advances in neural information processing systems (pp. 700-709).

Melis, G., Dyer, C., & Blunsom, P. (2017). On the state of the art of evaluation in neural language models. arXiv preprint arXiv:1707.05589.

Perrone, V., Jenatton, R., Seeger, M. W., & Archambeau, C. (2018). Scalable hyperparameter transfer learning. In Advances in Neural Information Processing Systems (pp. 6845-6855).

Reddi, V. J., Cheng, C., Kanter, D., Mattson, P., Schmuelling, G., Wu, C. J., … & Chukka, R. (2020, May). MLPerf inference benchmark. In 2020 ACM/IEEE 47th Annual International Symposium on Computer Architecture (ISCA) (pp. 446-459). IEEE.

Salinas, D., Shen, H., & Perrone, V. (2020) A quantile-based approach for hyperparameter transfer learning. In International Conference on Machine Learning.

Snoek, J., Rippel, O., Swersky, K., Kiros, R., Satish, N., Sundaram, N., … & Adams, R. (2015, June). Scalable Bayesian optimization using deep neural networks. In International conference on machine learning (pp. 2171-2180).

Springenberg, J. T., Klein, A., Falkner, S., & Hutter, F. (2016). Bayesian optimization with robust Bayesian neural networks. In Advances in neural information processing systems (pp. 4134-4142).

So, D. R., Liang, C., & Le, Q. V. (2019). The evolved transformer. arXiv preprint arXiv:1901.11117.

Yogatama, D., & Mann, G. (2014). Efficient transfer learning method for automatic hyperparameter tuning. In Artificial intelligence and statistics.