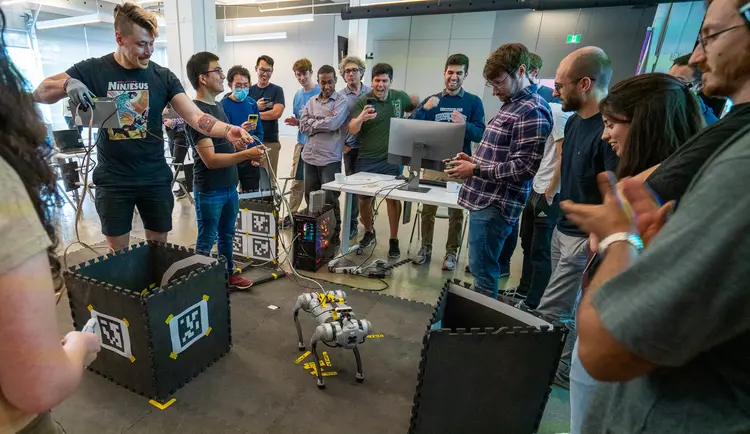

Robotics

Robots are used worldwide in many industrial processes and become more capable of assisting humans every year. Machine learning algorithms extend the capabilities of traditional robotics and have become essential to making robots adaptable to challenging situations.