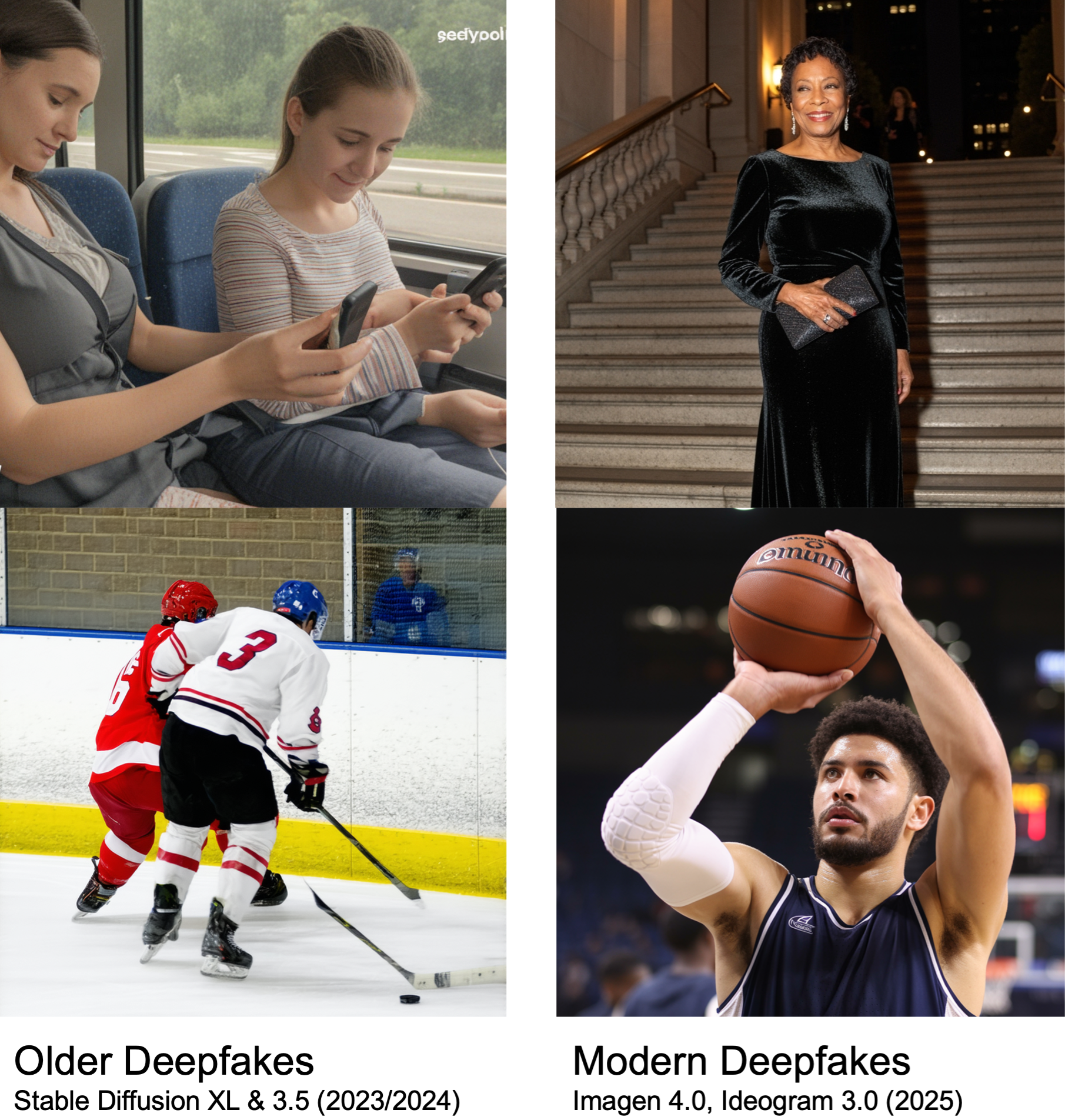

AI-generated images (or deepfakes) have become so realistic that it is now almost impossible to distinguish a fake image from a real one. Driven by ever-evolving frontier models, modern image generation has outpaced most deepfake detectors, leaving online users vulnerable to disinformation supercharged by hyperrealistic images.

As our ability to tell real images from AI-generated ones vanishes, the integrity of our information ecosystem and our shared belief in a common reality are under threat: a recent study (and our own human perception study) found that humans detect fake images from modern models only half of the time.

To address this, our new paper, "OpenFake: An Open Dataset and Platform Toward Real-World Deepfake Detection", introduces a new dataset to train the next generation of deepfake detection tools.

Detecting the invisible

Earlier AI tools could easily spot deepfakes because early generative models left glaring visual flaws: scrambled or nonsensical text, extra fingers or teeth, distorted eyes, and other obvious anatomical or structural mistakes. The latest models have fixed most of these issues, so detectors trained on older data can no longer rely on these artifacts to tell real images from modern AI-generated ones.

To tackle this issue, we needed a new dataset for training modern deepfake detectors that look beyond what the naked eye can see: while humans are easily fooled by visual realism, our method can spot the tiny artifacts an AI tool leaves behind when it generates or upscales an image.

An up-to-date dataset: OpenFake

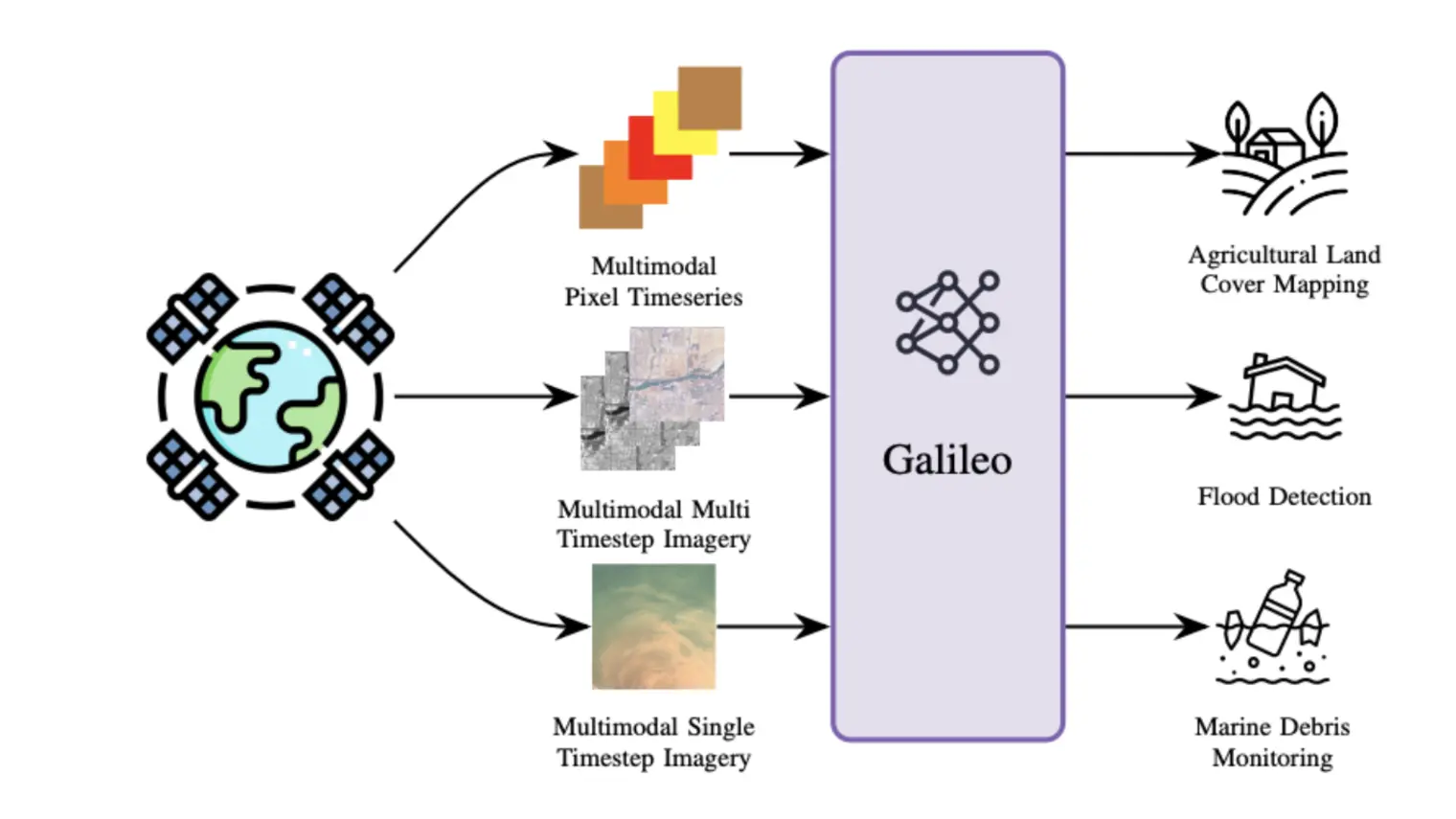

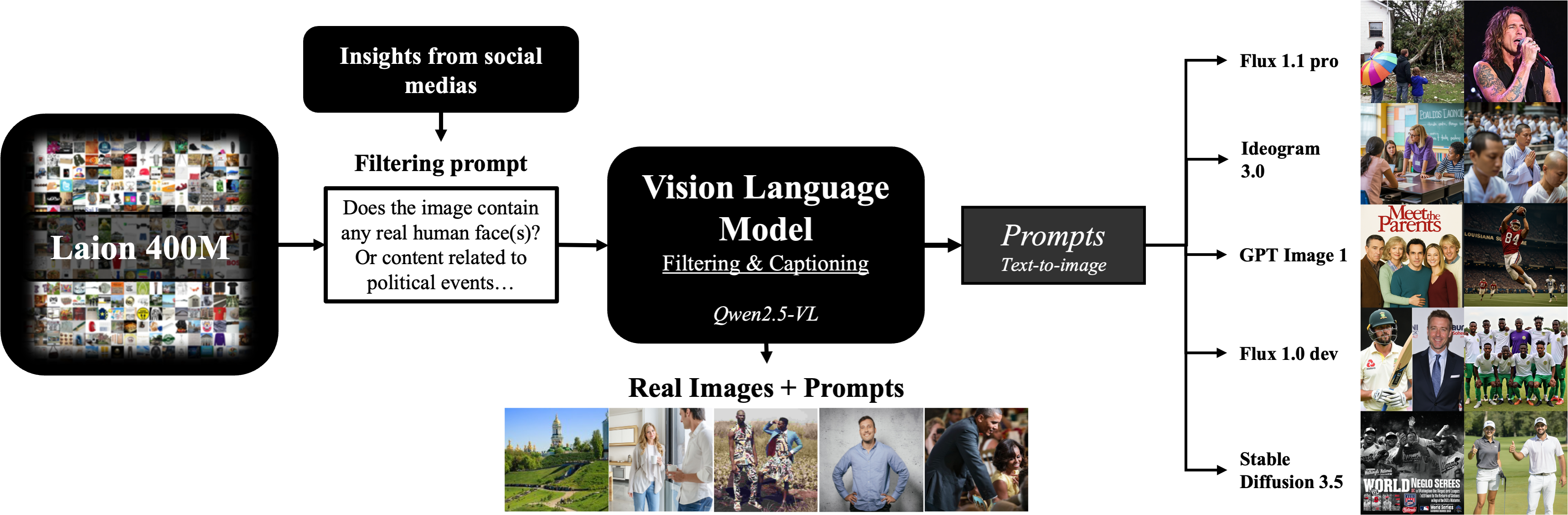

To build the OpenFake dataset, we collected 3 million real images from the public LAION-400M dataset. We extracted their captions and used them as prompts to generate 1 million synthetic counterparts with a range of modern text-to-image models. The final OpenFake dataset contains 4 million images, each paired with its associated prompt, curated for misinformation risk and informed by patterns observed on real-world social media.
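To make the caption-to-prompt pairing concrete, here is a minimal sketch of how real and synthetic records could be assembled. It assumes each real image arrives with a caption, that every third caption is sent to a generator (which reproduces the 3:1 ratio of 3 million real to 1 million synthetic images), and that generators are used in round-robin order. `Record`, `build_pairs`, `synth_every`, `fake_generate` and the model identifier strings are all hypothetical illustrations, not the actual OpenFake pipeline or schema.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Record:
    image_path: str  # hypothetical storage layout
    prompt: str      # caption of the real image, reused as the generation prompt
    label: int       # 0 = real, 1 = synthetic
    generator: str   # "real" for LAION images, otherwise a (hypothetical) model identifier


def build_pairs(
    real_items: Iterable[tuple[str, str]],
    generators: list[str],
    generate: Callable[[str, str], str],
    synth_every: int = 3,
) -> list[Record]:
    """Build real and synthetic records from (image_path, caption) pairs.

    Every `synth_every`-th caption is also sent to a text-to-image model,
    cycling through `generators`. `generate(model, prompt)` is a hypothetical
    stand-in for a real generation call that returns the new image's path.
    """
    records: list[Record] = []
    for i, (path, caption) in enumerate(real_items):
        records.append(Record(path, caption, label=0, generator="real"))
        if i % synth_every == 0:
            model = generators[(i // synth_every) % len(generators)]
            fake_path = generate(model, caption)
            records.append(Record(fake_path, caption, label=1, generator=model))
    return records


def fake_generate(model: str, prompt: str) -> str:
    # Stub generator: no image is produced, we only return a plausible path.
    return f"synthetic/{model}/{prompt.replace(' ', '_')}.png"


# Toy usage: 9 real images yield 3 synthetic counterparts (the same 3:1 ratio).
real = [(f"laion/{i}.jpg", f"caption {i}") for i in range(9)]
records = build_pairs(real, ["gpt-image-1", "imagen-4", "flux-1.1-pro"], fake_generate)
```

Keeping the prompt on both the real and the synthetic record means every fake image can be traced back to the real caption that produced it, which is what "each paired with its associated prompt" requires.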

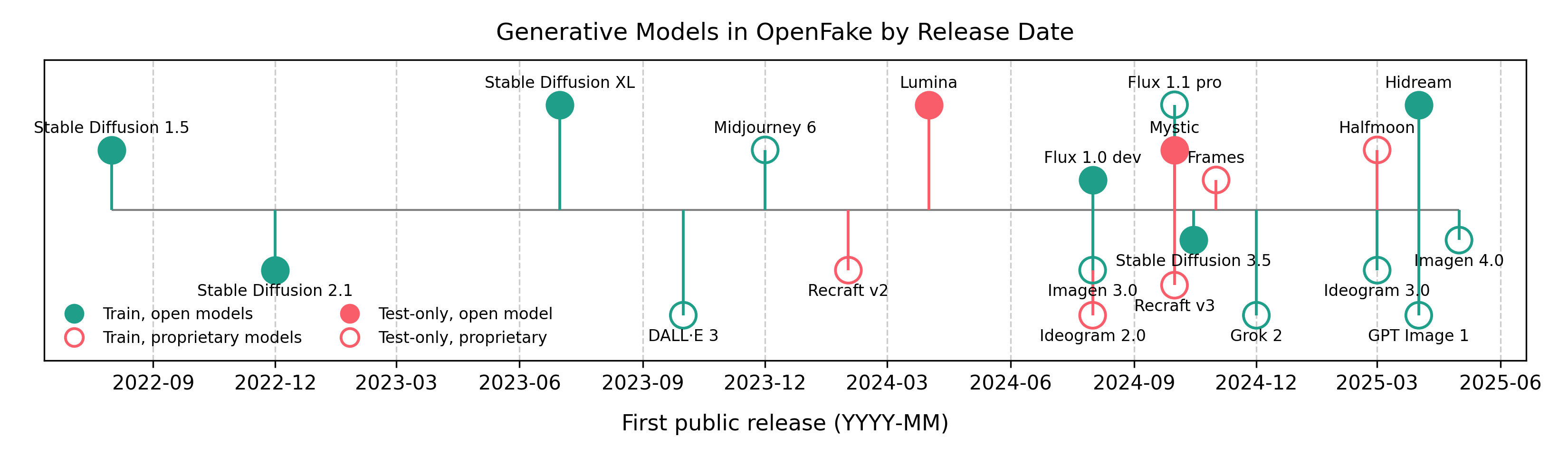

OpenFake is the first benchmark to incorporate images generated by the most recent state-of-the-art models, including OpenAI’s GPT-Image-1, Google’s Imagen-4, and Flux 1.1 Pro.

Superior Real-World Generalization

To evaluate the impact of the OpenFake dataset on detection performance, we trained the same SwinV2-based deepfake detector separately on each of three datasets: OpenFake, GenImage, and Semi-Truths. We then evaluated each resulting model on a hand-curated collection of real and synthetic images circulating on social media.

The detector trained on OpenFake performed exceptionally well on these in-the-wild images, confirming the results of our controlled experiments. In contrast, the detectors trained on the older datasets almost entirely failed to flag fake images generated by modern models.
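The post does not list the metrics used for this comparison, so the following is only a sketch, assuming each detector outputs a probability that an image is fake and that 0.5 is the decision threshold. `evaluate`, `Detector` and `always_real` are hypothetical names. The point it illustrates is why recall on fake images matters: a detector that never flags anything can still score decent accuracy on a mostly-real collection.

```python
from typing import Callable, Sequence

# Hypothetical detector interface: image path -> estimated probability of being fake.
Detector = Callable[[str], float]


def evaluate(
    detector: Detector,
    images: Sequence[tuple[str, int]],
    threshold: float = 0.5,
) -> dict[str, float]:
    """Score a detector on (image_path, label) pairs, where label 1 means synthetic."""
    tp = fn = tn = fp = 0
    for path, label in images:
        flagged = detector(path) >= threshold
        if label == 1:
            if flagged:
                tp += 1  # fake correctly caught
            else:
                fn += 1  # fake slipped through
        else:
            if flagged:
                fp += 1  # real image wrongly flagged
            else:
                tn += 1  # real image correctly left alone
    return {
        "accuracy": (tp + tn) / len(images),
        "fake_recall": tp / (tp + fn) if tp + fn else 0.0,
        "real_recall": tn / (tn + fp) if tn + fp else 0.0,
    }


# Toy collection: 4 real images and 2 synthetic ones (illustrative, not real data).
toy_set = [
    ("real_0.jpg", 0), ("real_1.jpg", 0), ("real_2.jpg", 0), ("real_3.jpg", 0),
    ("fake_0.png", 1), ("fake_1.png", 1),
]


def always_real(path: str) -> float:
    return 0.0  # degenerate detector that never flags anything as fake


metrics = evaluate(always_real, toy_set)
```

Here `always_real` reaches about 0.67 accuracy while catching none of the fakes, so reporting `fake_recall` separately for each training dataset makes a failure like the one described above visible.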

Crowdsourcing the Future of Deepfake Detection

No deepfake detection tool is future-proof: AI image generators are updated as soon as their flaws are detected, so any static dataset quickly becomes outdated.

This is why we introduced OpenFake Arena, a crowdsourced adversarial platform where users generate synthetic images to fool a live deepfake detector. Any image that successfully evades detection is added to the training dataset. In other words, whenever a user ‘beats’ the model, they simultaneously help strengthen it by contributing new challenging examples.
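The post does not describe the Arena's internals, so the loop below is a minimal sketch of the "beat the model, strengthen the model" idea under stated assumptions: the detector returns a probability that an image is fake, 0.5 separates the verdicts, and evading images are queued for a later retraining step that is not shown. `Arena`, `submit_synthetic` and `training_pool` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Arena:
    # Hypothetical detector interface: raw image bytes -> probability of being fake.
    detector: Callable[[bytes], float]
    threshold: float = 0.5
    # (image, label) pairs waiting for the next retraining round.
    training_pool: list[tuple[bytes, int]] = field(default_factory=list)

    def submit_synthetic(self, image: bytes) -> bool:
        """Score a user's synthetic image and return True if it fooled the detector."""
        fooled = self.detector(image) < self.threshold
        if fooled:
            # The Arena knows the submission is synthetic, so it is stored with
            # label 1 even though the detector called it real: a hard example.
            self.training_pool.append((image, 1))
        return fooled


# Toy detector (illustrative only): flags images containing a telltale marker.
def toy_detector(image: bytes) -> float:
    return 0.9 if b"artifact" in image else 0.1


arena = Arena(toy_detector)
caught = arena.submit_synthetic(b"render with artifact")  # detected, not stored
evaded = arena.submit_synthetic(b"clean render")          # evades, added to the pool
```

Only images that evade detection enter the pool, so each retraining round focuses on exactly the cases the current detector gets wrong.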

Users can upload any image to the Arena and receive an instant verdict from the detector, making it a practical tool for testing suspicious content in real time. We also plan to release a browser extension that will allow users to check any image on the internet with a single click.

To make the experience more engaging, the Arena includes a leaderboard that ranks participants based on their ability to create convincing synthetic images. By joining the Arena, trying to “break” the detector and climbing the leaderboard, users directly support the development of the next generation of robust deepfake defenses.
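The post does not say how "ability to create convincing synthetic images" is scored. One plausible rule, assumed here purely for illustration, ranks participants by their number of successful evasions and breaks ties by their evasion rate. `leaderboard` and its input format are hypothetical.

```python
from collections import defaultdict


def leaderboard(submissions: list[tuple[str, bool]]) -> list[tuple[str, int, float]]:
    """Rank users from (user, fooled_detector) pairs.

    Returns (user, evasions, evasion_rate) rows: most evasions first, higher
    evasion rate breaks ties, then user name for a stable order.
    """
    attempts: dict[str, int] = defaultdict(int)
    wins: dict[str, int] = defaultdict(int)
    for user, fooled in submissions:
        attempts[user] += 1
        if fooled:
            wins[user] += 1
    rows = [(user, wins[user], wins[user] / attempts[user]) for user in attempts]
    rows.sort(key=lambda row: (-row[1], -row[2], row[0]))
    return rows


# Toy submission log (illustrative users, not real Arena data).
subs = [
    ("ana", True), ("ana", False),
    ("ben", True), ("ben", True), ("ben", False),
    ("cy", True),
]
board = leaderboard(subs)
```

With this rule, ben ranks first with two evasions, and cy edges out ana because both have one evasion but cy needed fewer attempts.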