Automated Hierarchy Discovery for Planning in Partially Observable Environments

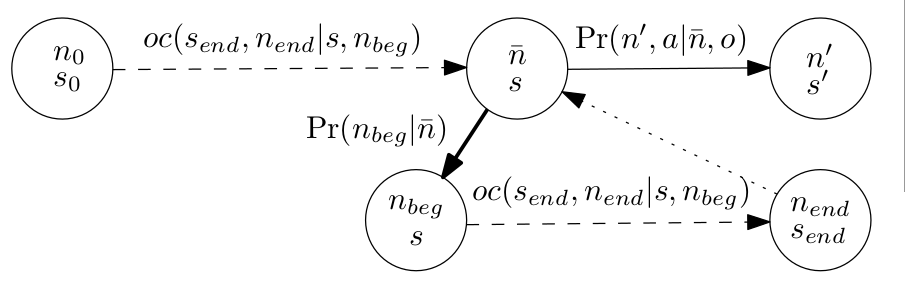

Planning in partially observable domains is a notoriously difficult problem. However, in many real-world scenarios, planning can be simplified by decomposing the task into a hierarchy of smaller planning problems. Several approaches have been proposed to optimize a policy that decomposes according to a hierarchy specified a priori. In this paper, we investigate the problem of automatically discovering the hierarchy. More precisely, we frame the optimization of a hierarchical policy as a non-convex optimization problem that can be solved with general non-linear solvers, a mixed-integer non-linear approximation, or a form of bounded hierarchical policy iteration. By encoding the hierarchical structure as variables of the optimization problem, we can automatically discover a hierarchy. Our method is flexible enough to allow any part of the hierarchy to be specified based on prior knowledge while letting the optimization discover the unknown parts. It can also discover hierarchical policies, including recursive policies, that are more compact (potentially with infinitely fewer parameters) and often easier to understand given the decomposition induced by the hierarchy.

Reference

Laurent Charlin, Pascal Poupart, Romy Shioda, Automated Hierarchy Discovery for Planning in Partially Observable Environments, in: Neural Information Processing Systems (NIPS), 2006.