Modeling Deep Temporal Dependencies with Recurrent “Grammar Cells”

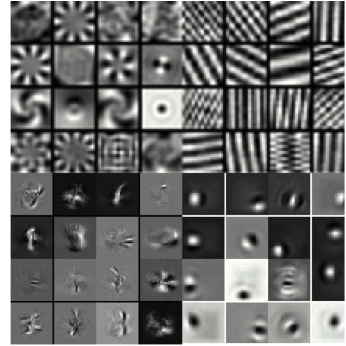

We propose modeling time series by representing the transformations that take a frame at time t to a frame at time t+1. To this end, we show how a bilinear model of transformations, such as a gated autoencoder, can be turned into a recurrent network by training it with backprop-through-time to predict future frames from the current frame and the inferred transformation. We also show that stacking multiple layers of gating units in a recurrent pyramid makes it possible to represent the “syntax” of complicated time series, and that the resulting model can outperform standard recurrent neural networks in prediction accuracy on a variety of tasks.
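To make the idea concrete, here is a minimal NumPy sketch of a single gating layer used for prediction. It is an illustration under assumptions, not the authors' implementation: it assumes a factored gated autoencoder with filter matrices `U` and `V` and a mapping matrix `W`, uses random untrained weights, and holds the inferred transformation fixed during the rollout. All names and layer sizes are hypothetical, and training with backprop-through-time is omitted.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def init_params(n_in, n_factors, n_maps, seed=0):
    """Random, untrained weights; the sizes are illustrative assumptions."""
    rng = np.random.default_rng(seed)
    scale = 0.1
    return {
        "U": rng.normal(0.0, scale, (n_factors, n_in)),    # filters on the earlier frame
        "V": rng.normal(0.0, scale, (n_factors, n_in)),    # filters on the later frame
        "W": rng.normal(0.0, scale, (n_maps, n_factors)),  # factors -> mapping units
    }


def infer_mapping(p, x_prev, x_curr):
    """Infer the transformation code from two consecutive frames."""
    factors = (p["U"] @ x_prev) * (p["V"] @ x_curr)  # bilinear (multiplicative) interaction
    return sigmoid(p["W"] @ factors)


def predict_next(p, x_curr, m):
    """Apply the transformation code m to the current frame."""
    return p["V"].T @ ((p["U"] @ x_curr) * (p["W"].T @ m))


def rollout(p, x0, x1, n_steps):
    """Predict n_steps frames, assuming the transformation stays constant."""
    m = infer_mapping(p, x0, x1)
    x = x1
    frames = []
    for _ in range(n_steps):
        x = predict_next(p, x, m)  # each prediction is fed back in as the current frame
        frames.append(x)
    return np.stack(frames)
```

Calling `rollout(init_params(8, 16, 4), x0, x1, 3)` with two 8-dimensional frames returns an array of shape `(3, 8)`. In a trained model, the prediction error over such a rollout would be the quantity minimized by backprop-through-time.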

Reference

Vincent Michalski, Roland Memisevic, Kishore Konda, Modeling Deep Temporal Dependencies with Recurrent Grammar Cells, in: Neural Information Processing Systems (NIPS), 2014