Deep Sparse Rectifier Neural Networks

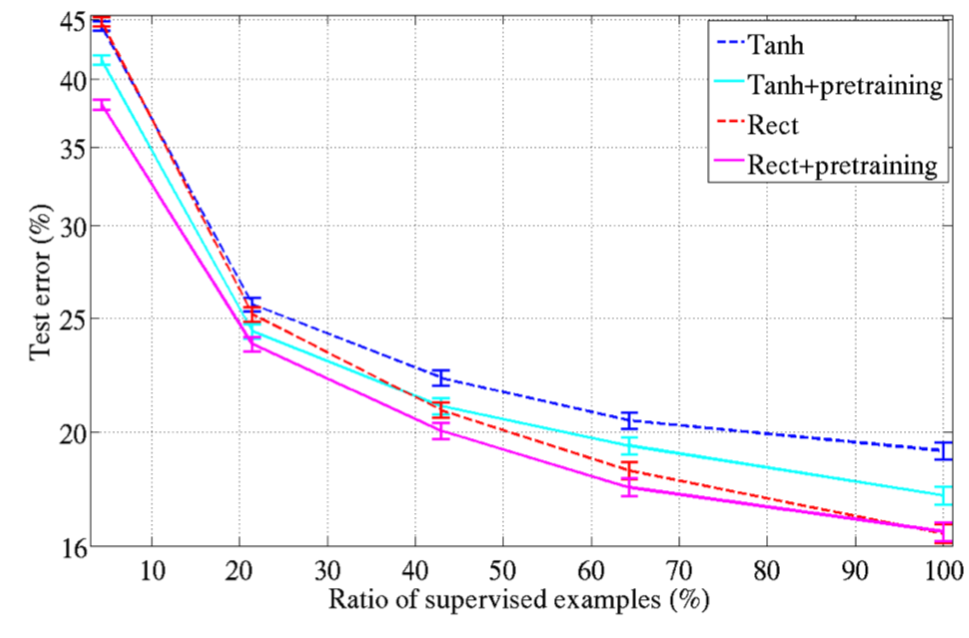

While logistic sigmoid neurons are more biologically plausible than hyperbolic tangent neurons, the latter work better for training multi-layer neural networks. This paper shows that rectifying neurons are an even better model of biological neurons and yield equal or better performance than hyperbolic tangent networks in spite of the hard non-linearity and non-differentiability at zero, creating sparse representations with true zeros, which seem remarkably suitable for naturally sparse data. Even though they can take advantage of semi-supervised setups with extra unlabeled data, deep rectifier networks can reach their best performance without requiring any unsupervised pre-training on purely supervised tasks with large labeled datasets. Hence, these results can be seen as a new milestone in the attempts at understanding the difficulty in training deep but purely supervised neural networks, and closing the performance gap between neural networks learnt with and without unsupervised pre-training.
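To make "sparse representations with true zeros" concrete, here is a minimal sketch, assuming the rectifier is the usual max(0, x) activation. It compares one randomly initialized hidden layer under the rectifier and under the hyperbolic tangent. The layer sizes, the random weights, and the names `rectifier` and `layer` are illustrative assumptions, not the paper's experimental setup.

```python
import math
import random


def rectifier(x):
    """Rectifier activation: max(0, x). Non-positive inputs map to exactly 0.0."""
    return max(0.0, x)


def layer(inputs, weights, biases, activation):
    """Apply one fully connected layer followed by an elementwise activation."""
    return [
        activation(sum(w * v for w, v in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical toy sizes and random parameters, chosen only for illustration.
random.seed(0)
n_in, n_hidden = 8, 16
inputs = [random.gauss(0.0, 1.0) for _ in range(n_in)]
weights = [[random.gauss(0.0, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
biases = [0.0] * n_hidden

h_rect = layer(inputs, weights, biases, rectifier)
h_tanh = layer(inputs, weights, biases, math.tanh)

# Count hidden units whose activation is exactly zero.
zeros_rect = sum(1 for h in h_rect if h == 0.0)
zeros_tanh = sum(1 for h in h_tanh if h == 0.0)

print(f"rectifier: {zeros_rect}/{n_hidden} units exactly zero")
print(f"tanh:      {zeros_tanh}/{n_hidden} units exactly zero")
```

In this sketch, every unit with a negative pre-activation is exactly zero under the rectifier, whereas tanh units are zero only if the pre-activation happens to be exactly zero. With symmetric random weights, roughly half of the rectifier units would be expected to be inactive, though the exact count depends on the random draw.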